Putting the Swarm to Work

Last weekend I built a docker swarm using an ansible role and deployed elasticsearch, mongodb and graylog into it, it worked but was useless because no way of ingesting data was defined. This week I added haproxy and rsyslog containers to de swarm in order to be able to ingest data. Docker swarm allows exposing new ports on containers dinamically, but as listening ports on graylog are defined by users at application level, they aren’t exposed without the intervention of an administrator.

Deploying HAProxy

I’ve deployed HAProxy in front of several graylog nodes many times, including deploying it using docker-compose, but for a swarm, I looked for more information and found a detailed blog page from HAProxy called HAProxy on Docker Swarm: Load Balancing and DNS Service Discovery. It served me as a guide, not only for deploying HAProxy, but for gaining a better understanding of swarm’s networking model, because that blog entry delved into it, comparing the three modes HAProxy can be deployed.

The first step was to create a HAProxy configuration file based on the blog suggestions and my personal experience.

global

log fd@2 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

stats socket /var/lib/haproxy/stats expose-fd listeners

master-worker

resolvers docker

nameserver dns1 127.0.0.11:53

resolve_retries 3

timeout resolve 1s

timeout retry 1s

hold other 10s

hold refused 10s

hold nx 10s

hold timeout 10s

hold valid 10s

hold obsolete 10s

defaults

timeout connect 10s

timeout client 30s

timeout server 30s

log global

mode http

option httplog

frontend graylog_ui

bind *:9000

use_backend stat if { path -i /my-stats }

default_backend graylog_ui

backend graylog_ui

balance roundrobin

option httpchk GET /api/system/lbstatus

http-request set-header X-Forwarded-For %[src]

http-request set-header X-Graylog-Server-URL "http://%[req.hdr(Host)]/"

server graylogmaster graylogmaster:9000 check resolvers docker init-addr libc,none port 9000

server graylogslave3 graylogslave3:9000 check resolvers docker init-addr libc,none port 9000 backup

server graylogslave2 graylogslave2:9000 check resolvers docker init-addr libc,none port 9000 backup

listen graylog

mode tcp

bind *:12200-12300

balance roundrobin

option tcplog

option log-health-checks

option logasap

option httpchk GET /api/system/lbstatus

server graylogslave2 graylogslave2 check port 9000 resolvers docker init-addr libc,none

server graylogslave3 graylogslave3 check port 9000 resolvers docker init-addr libc,none

backend stat

stats enable

stats uri /my-stats

stats refresh 15s

stats show-legends

stats show-node

The blog page suggested copying the same file to all worker nodes into de same route and bindmount it into the container. As it was not supposed to be changed during service operation, and it was small I wanted to give the swarm’s configuration feature a try, it’s well explained at Docker Swarm’s doc Store configuration data using Docker Configs.

The corresponding service definition was:

haproxy:

image: haproxytech/haproxy-alpine:2.4

ports:

- published: 80

target: 9000

protocol: tcp

mode: host

- published: 8080

target: 9000

protocol: tcp

mode: host

networks:

- graylog

configs:

- source: haproxy

target: /usr/local/etc/haproxy/haproxy.cfg

mode: 0444

volumes:

- haproxy-socket:/var/run/haproxy/:rw

dns: 127.0.0.11

deploy:

mode: global

resources:

limits:

memory: 512M

cpus: "1"

Deploying rsyslog

The next step was deploying rsyslog, as the official rsyslog docker release in docker hub was 3 years old, I looked for a more recent one, and I found jbenninghoff/rsyslog-forwarder amongst other images, but at least he spent some time writing a readme for docker hub. I followed the same strategy and created a configuration for rsyslog.conf

$MaxMessageSize 64k

module(load="imudp")

input(type="imudp" port="514")

module(load="imtcp")

input(type="imtcp" port="514")

$ActionForwardDefaultTemplateName RSYSLOG_SyslogProtocol23Format

$ActionSendTCPRebindInterval 3000

*.* @@haproxy:12201

As configs are limited in size and this was a proof of concept, I removed all comments and all unused directives, and, as this was meant to run inside a container, I also removed the socket listener for local messages.

I added the ActionSendTCPRebindInterval to make sure it balances between nodes, but that was a best scenario assumption because I had no means of knowing to which haproxy instance are the messages going, so I could get unwanted East-West traffic.

The corresponding service on my compose file was:

rsyslog:

image: jbenninghoff/rsyslog-forwarder

ports:

- published: 514

target: 514

protocol: tcp

mode: host

- published: 514

target: 514

protocol: udp

mode: host

networks:

- graylog

configs:

- source: rsyslog

target: /etc/rsyslog.conf

mode: 0444

dns: 127.0.0.11

deploy:

mode: global

resources:

limits:

memory: 512M

cpus: "1"

Feeding the beast

Once everything was in place it was time to put some records into graylog. I used a nxlog container on my host machine reading a 2M lines text file. The first attempt failed at the very first second, because there wasn’t space available for elasticsearch indices and it hit the watermark almost instantaneously.

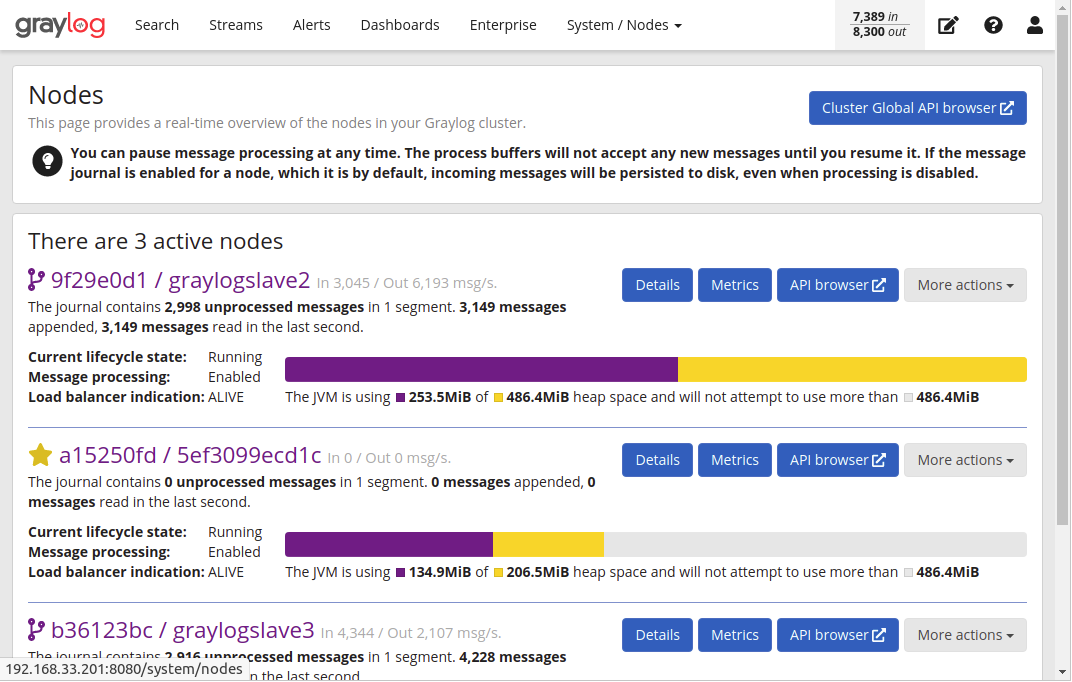

Once the storage on the data nodes was extended, I succeeded to ingest all the messages at an acceptable rate of about 8K messages per second.

But they weren’t shown at the search interface, I searched directly into the graylog_0 index using cerebro and they were there, so I need to do some troubleshooting.

Troubleshooting

Storing messages in a unretrievable storage may be considered a safe practice, but useless, so next time I’ll focus on why aren’t they being shown.