Deploying k8s on Oracle Linux 8

I’m a bit worried about the change in CentOS’s lifecycle policy, which means CentOS 8 will reach its EOL before CentOS 7, and they say a father shouldn’t bury his son. For my own tests it’s not a problem, as I can get Red Hat Enterprise Linux 8 licenses through the Red Hat Developer program, but for the company I work for, it would be.

All the members of my team are seasoned Linux administrators, and we have been focused on CentOS for years; some of us even hold certifications such as RHCSA and RHCE. The development team is switching to a container-based approach for the upcoming releases of our SIEM, but we still rely on a large set of Jenkins pipelines to build our RPM packages, so we need an alternative to CentOS that doesn’t take us out of the Red Hat family.

I read about the Oracle Linux 8 lifecycle, and it matches or even extends that of RHEL 8, so it was worth a try. The best test I could think of was deploying a Kubernetes cluster over a bunch of machines.

Setting up the infrastructure

The first step was to deploy five virtual servers running Oracle Linux 8 in my lab environment and, as before, I did it with Vagrant. My Vagrantfile was the same one I used for the k8s with HA test, except for the box image:

Vagrant.configure("2") do |config|
  config.vm.box = "generic/oracle8"

  config.vm.provision :ansible do |ansible|
    ansible.playbook = "provisioning/provisioning.yml"
  end

  (1..5).each do |i|
    ...

I even kept my provisioning playbook, which basically creates my automation user and sets up its SSH key for passwordless authentication. It’s tempting to do a lot of work in the provisioning phase, but that’s a mistake: at that point the VMs are created one at a time, so every provisioning task runs sequentially, once per VM.

In his blog post "Parallel provisioning for speeding up Vagrant", Joe Miller proposed skipping the provisioning phase during vagrant up and parallelizing it with a shell script. It’s a good option, but I have better advice: use Vagrant provisioning only to set up your automation user, and then run Ansible as usual once all the VMs are up.
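That workflow can be sketched as a small shell function. It assumes a static inventory called inventory.ini listing the five VMs and a main playbook called site.yml; both names are hypothetical, so adjust them to your repository. With providers such as VirtualBox, vagrant up brings the machines up one after another, so it only runs the quick provisioning/provisioning.yml on each; everything else happens afterwards in a single ansible-playbook run, where Ansible works on several hosts at once.

```shell
# Minimal sketch: light Vagrant provisioning first, heavy Ansible work afterwards.
# inventory.ini and site.yml are hypothetical names.
bring_up_lab() {
  local inventory="${1:-inventory.ini}"
  local playbook="${2:-site.yml}"

  # VMs are created one by one; each runs only provisioning/provisioning.yml
  # (automation user + SSH key), which is quick.
  vagrant up || return 1

  # A single run against all hosts: Ansible executes each task on up to
  # --forks hosts in parallel (5 is also Ansible's default).
  ansible-playbook -i "$inventory" --forks 5 "$playbook"
}
```

Run it as bring_up_lab (or bring_up_lab my-inventory.ini my-playbook.yml) from the directory that holds the Vagrantfile.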

Turning the architecture container-ready

Once the VMs were up, it was time to install the container runtime. Following Tony Mackay’s guide to installing k8s on RHEL 8, I decided to use CRI-O as the container runtime, and I found the alvistack role very handy. This role requires some other alvistack roles, so I fetched them using a slight variation of its galaxy-requirements.yml file:

collections:
  - name: operator_sdk.util
    src: https://galaxy.ansible.com
    version: ">=0.0.0,<1.0.0"

roles:
  - name: runc
    src: https://github.com/alvistack/ansible-role-runc
    version: develop
  - name: crun
    src: https://github.com/alvistack/ansible-role-crun
    version: develop
  - name: containers_common
    src: https://github.com/alvistack/ansible-role-containers_common
    version: develop
  - name: containernetworking_plugins
    src: https://github.com/alvistack/ansible-role-containernetworking_plugins
    version: develop
  - name: conmon
    src: https://github.com/alvistack/ansible-role-conmon
    version: develop
  - name: cri-o
    src: https://github.com/alvistack/ansible-role-cri_o
    version: develop
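Fetching those dependencies can be sketched like this, assuming the file is saved as galaxy-requirements.yml in the current directory. The roles (cloned from the alvistack GitHub repositories) and the operator_sdk.util collection are installed by two ansible-galaxy subcommands that read the same file. Recent Ansible releases can also handle both sections with a plain ansible-galaxy install -r, but the explicit form makes it obvious what goes where.

```shell
# Minimal sketch: install the roles and the collection from one requirements file.
install_galaxy_requirements() {
  local reqs="${1:-galaxy-requirements.yml}"
  # roles: runc, crun, containers_common, containernetworking_plugins, conmon, cri-o
  ansible-galaxy role install -r "$reqs" || return 1
  # collections: operator_sdk.util
  ansible-galaxy collection install -r "$reqs"
}
```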

Then I completed the installation of the container runtime with the suggested playbook.

- hosts: all
  become: true
  tasks:
    - name: include role runc
      include_role:
        name: runc
      tags: runc

    - name: include role crun
      include_role:
        name: crun
      tags: crun

    - name: include role containers_common
      include_role:
        name: containers_common
      tags: containers_common

    - name: include role containernetworking_plugins
      include_role:
        name: containernetworking_plugins
      tags: containernetworking_plugins

    - name: include role conmon
      include_role:
        name: conmon
      tags: conmon

    - name: include role cri-o
      include_role:
        name: cri-o
      tags: cri-o
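Running that playbook and checking the result could look like the sketch below. The file names cri-o.yml (for the playbook) and inventory.ini are hypothetical. The final ad-hoc command asks systemd on every host whether the crio unit, which is how the CRI-O service is named, is active; it assumes the roles leave the service enabled and started.

```shell
# Minimal sketch: apply the container runtime playbook, then check CRI-O everywhere.
# cri-o.yml and inventory.ini are hypothetical file names.
install_container_runtime() {
  local inventory="${1:-inventory.ini}"
  ansible-playbook -i "$inventory" cri-o.yml || return 1
  # Ad-hoc check on all hosts; the CRI-O systemd unit is called "crio"
  ansible all -i "$inventory" --become -m command -a "systemctl is-active crio"
}
```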

None of the roles complained about the target machines running Oracle Linux 8, which helped me assess its binary compatibility, thanks to the wonderful way the roles handle OS detection and task inclusion.

The next step was to install the Kubernetes packages: kubeadm, kubelet and kubectl. I used a slight variation of the playbook from my k8s HA practice, so I’m not going to include it here, but I’ve made it available on my GitHub repo. The only things I changed were the ones tightly tied to the OS, basically setting the route to the Kubernetes network segment.
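I’m not reproducing that playbook here, but on a single EL8 node the core package steps boil down to something like the following sketch, based on the upstream kubeadm installation instructions for Red Hat-based distributions rather than on my playbook. It assumes it runs as root and that a yum repository with the id kubernetes is already configured; the repository definition is not shown because its URL depends on the Kubernetes version.

```shell
# Minimal sketch of the package installation on one EL8 node (run as root).
# Assumes a repo with id "kubernetes" exists; upstream defines it with an
# exclude= line, hence --disableexcludes.
install_k8s_packages() {
  # With its default configuration, the kubelet refuses to start while swap is on.
  # (Also comment out the swap line in /etc/fstab so this survives reboots.)
  swapoff -a || return 1
  dnf install -y kubelet kubeadm kubectl --disableexcludes=kubernetes || return 1
  # Until kubeadm init/join writes its configuration, the kubelet keeps
  # restarting; that is expected.
  systemctl enable --now kubelet
}
```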

Creating the cluster

I created the cluster by running the kubeadm command by hand, exactly as I did on Ubuntu:

kubeadm init --upload-certs --control-plane-endpoint anthrax.garmo.local:6443 --apiserver-advertise-address 10.255.255.201

And again, after a few minutes, my first control-plane node was up and running:

....
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join anthrax.garmo.local:6443 --token 4c511s.XXXXXXXXXXXXXX \
    --discovery-token-ca-cert-hash sha256:XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX \
    --control-plane --certificate-key XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

  kubeadm join anthrax.garmo.local:6443 --token 4c511s.XXXXXXXXXXXX \
    --discovery-token-ca-cert-hash sha256:XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

Then I installed the Weave Net network plugin:

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"
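Before joining more nodes, it can help to wait until the plugin is actually running. A minimal sketch, assuming the manifest creates a DaemonSet named weave-net in the kube-system namespace (the function name is hypothetical):

```shell
# Minimal sketch: block until Weave Net is rolled out, then show node status.
# Assumes the DaemonSet is named weave-net and lives in kube-system.
wait_for_weave() {
  kubectl -n kube-system rollout status daemonset/weave-net --timeout=300s || return 1
  # With a working CNI, the control-plane node should now report Ready
  kubectl get nodes
}
```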

Once the network plugin was installed, I joined two worker nodes using the proposed command, and it worked like a charm. After that, I did the same for two additional control-plane nodes, and my k8s cluster on Oracle Linux nodes was up and running.
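The join commands printed by kubeadm init don’t last forever: the bootstrap token expires (after 24 hours by default) and the uploaded certificates are deleted after two hours, as the init output warns. If you add nodes later, the sketch below regenerates what you need and then checks that every node has become Ready. It only uses standard kubeadm and kubectl subcommands; the function names are my own.

```shell
# Minimal sketch. Run print_join_commands as root on an existing control-plane node.
print_join_commands() {
  # Creates a new bootstrap token and prints a complete worker join command
  kubeadm token create --print-join-command || return 1
  # Re-uploads the control-plane certificates and prints a new certificate key.
  # For a control-plane join, append to the worker command:
  #   --control-plane --certificate-key <printed key>
  kubeadm init phase upload-certs --upload-certs
}

# Waits (up to 5 minutes) until every node reports Ready, then lists them
check_nodes() {
  kubectl wait --for=condition=Ready nodes --all --timeout=300s || return 1
  kubectl get nodes -o wide
}
```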

Installing some charts

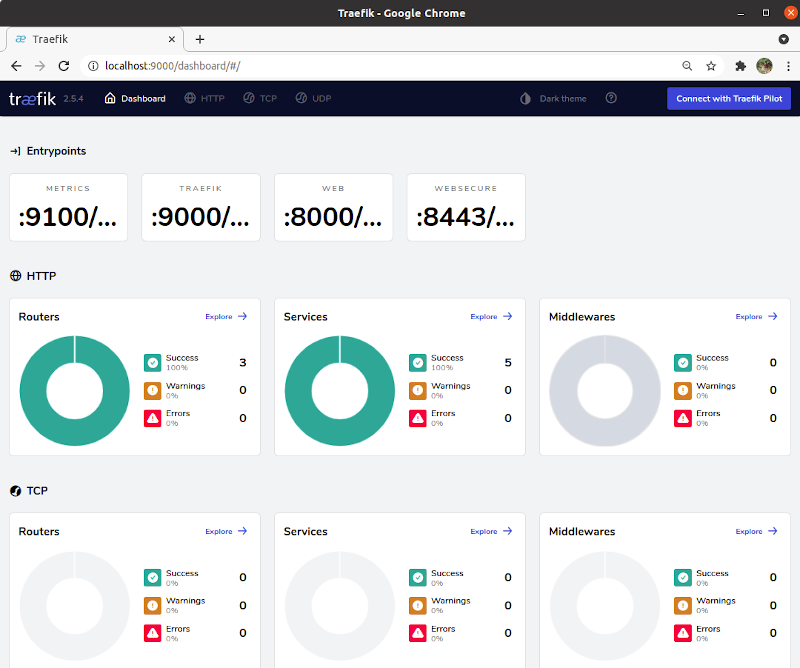

I thought I had gained a deeper understanding of Helm packages after playing a bit with Tanzu packages, so I decided to try at least one on my cluster. As Traefik was the subject of one of my first posts, I decided to install it from a Helm chart, and the installation was pretty simple:

$ helm repo add traefik https://helm.traefik.io/traefik
$ helm install traefik traefik/traefik
NAME: traefik
LAST DEPLOYED: Wed Dec 1 23:16:41 2021
NAMESPACE: default
STATUS: deployed
REVISION: 1
TEST SUITE: None
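To script this step, a minimal sketch could use helm upgrade --install, which installs the release the first time and upgrades it on later runs, so the same command can be repeated. The release name, chart and default namespace are the ones used above; the function name is hypothetical.

```shell
# Minimal sketch: repeatable install of the Traefik chart into "default".
deploy_traefik() {
  helm repo add traefik https://helm.traefik.io/traefik || return 1
  # Refresh the local chart index so the latest chart version is used
  helm repo update || return 1
  helm upgrade --install traefik traefik/traefik --namespace default || return 1
  # Same summary as the install output (NAME, STATUS, REVISION, ...)
  helm status traefik --namespace default
}
```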

To access the dashboard, I had to forward port 9000, as explained in the chart documentation:

kubectl port-forward $(kubectl get pods --selector "app.kubernetes.io/name=traefik" --output=name) 9000:9000
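That command keeps the terminal busy; while it runs, the dashboard is served at http://127.0.0.1:9000/dashboard/ (Traefik requires the trailing slash). A minimal sketch that runs the forward in the background and checks that the dashboard answers could look like this. It assumes a single Traefik pod in the default namespace and that curl is installed; the two-second wait is a crude assumption, and the function name is hypothetical.

```shell
# Minimal sketch: background port-forward plus a quick reachability check.
check_traefik_dashboard() {
  local pod pf_pid rc=0
  # Same selector as above; assumes exactly one Traefik pod
  pod=$(kubectl get pods --selector "app.kubernetes.io/name=traefik" --output=name) || return 1
  kubectl port-forward "$pod" 9000:9000 >/dev/null &
  pf_pid=$!
  sleep 2  # crude: give the forward a moment to start listening
  # The trailing slash matters: Traefik serves the dashboard under /dashboard/
  curl -fsS -o /dev/null http://127.0.0.1:9000/dashboard/ || rc=1
  # Stop the background forward and reap it
  kill "$pf_pid" 2>/dev/null
  wait "$pf_pid" 2>/dev/null
  [ "$rc" -eq 0 ] && echo "dashboard reachable"
  return "$rc"
}
```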

Installing a storage plugin

While I was testing Tanzu, I also learned about the local-path storage provisioner developed by Rancher. It creates local volumes on demand and makes the scheduler aware of where they were created, so you don’t have to bind pods to nodes by hand. I installed it to use in future tests:

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml
namespace/local-path-storage created
serviceaccount/local-path-provisioner-service-account created
clusterrole.rbac.authorization.k8s.io/local-path-provisioner-role created
clusterrolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created
deployment.apps/local-path-provisioner created
storageclass.storage.k8s.io/local-path created
configmap/local-path-config created

As I was expecting, there were no issues during the installation.
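To check that the provisioner actually works, a claim can request storage from the local-path StorageClass and a pod can mount it. In the sketch below, the names demo-pvc and volume-test, the busybox image and the 128Mi size are hypothetical choices. Because that StorageClass uses the WaitForFirstConsumer binding mode, the claim stays Pending until the pod is scheduled, and only then is the volume created on the node running the pod.

```shell
# Minimal sketch: claim a local-path volume and mount it in a test pod.
# demo-pvc, volume-test, the busybox image and the 128Mi size are hypothetical.
create_local_path_demo() {
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: local-path
  resources:
    requests:
      storage: 128Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: volume-test
spec:
  containers:
    - name: volume-test
      image: busybox
      command: ["sh", "-c", "echo hello > /data/hello && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: demo-pvc
EOF
}
```

After running it, kubectl get pvc demo-pvc should move from Pending to Bound once the pod is scheduled. If you want claims without an explicit storageClassName to use it, the project README shows how to mark it as the default class: kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'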

Conclusions

After testing Oracle Linux 8, I think it’s a viable way out of the CentOS lifecycle mess. I’ve been reluctant to use Oracle Linux for years, but as we are being forced to move away from CentOS as our long-term support OS, I think I’m going to give it a chance.

References

- CRI-O installation instructions: https://github.com/cri-o/cri-o/blob/main/install.md#install-with-ansible

- Ansible role for installing CRI-O: https://github.com/alvistack/ansible-role-cri_o

- A blog entry about parallelizing Vagrant provisioning: https://dzone.com/articles/parallel-provisioning-speeding

- My homelab repo hosting involved files: https://github.com/juanjo-vlc/homelab/tree/main/k8s-oracle