Deploying my first cluster using kubeadm

After completing Mumshad Mannambeth’s Certified Kubernetes Administrator (CKA) with Practice Tests course, it was time to deploy my first Kubernetes cluster for practice. There was an easy (and recommended) way, using kubeadm, and a hard way: from scratch. I chose the easy one, but there is a video playlist for those who want to give the hard way a try.

Considerations

- Most of the documentation was based on Ubuntu systems

- Ubuntu 20.04 LTS shipped a 5.4 kernel, ahead of CentOS’ 4.18 kernel

- Docker support (dockershim) was deprecated, with removal planned for Kubernetes 1.22 at the time (it was eventually removed in 1.24)

So I used Ubuntu 20.04 LTS this time (maybe I’ll try CentOS 8 Stream in the future). I also made the tough choice of using CRI-O instead of containerd, mostly because it was a CNCF incubating project backed by Red Hat.

Base system

Again, I used Vagrant to provision the environment, with almost the same configuration as in my previous exercises. The only change to my Vagrantfile was switching to an Ubuntu image:

config.vm.box = "peru/ubuntu-20.04-server-amd64"
config.vm.box_version = "20210701.01"

The Ansible playbook that provisions the nodes with my automation user and its SSH keys is the same as before.

I wanted to know every step needed to bring up the cluster, so I stayed away from Ansible roles that set up the cluster for you and wrote my own playbook.

The first step was to install the prerequisite packages:

- name: Install prerequisites packages
  apt:
    name:
      - apt-transport-https
      - curl
      - ca-certificates
      - gpg
    state: latest
    update_cache: yes

Then I had to load the br_netfilter module, configure it to load at boot, and tweak some kernel tunables so that Kubernetes networking works:

- name: configure br_netfilter module

- name: load br_netfilter module
  modprobe:
    name: br_netfilter
    state: present

- name: setup sysctl
  sysctl:
    name: "{{ item }}"
    value: '1'
    sysctl_file: /etc/sysctl.d/10-k8s.conf
    reload: yes
  loop:
    - net.bridge.bridge-nf-call-ip6tables
    - net.bridge.bridge-nf-call-iptables
    - net.ipv4.ip_forward
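The load-at-boot part isn't visible in the tasks above. On systemd-based Ubuntu, this is commonly done with a file in /etc/modules-load.d/. Below is a minimal shell sketch that writes that file and then checks the three sysctls the playbook sets. MODULES_LOAD_DIR and SYSCTL_ROOT are hypothetical overrides, added only so the functions can be tried against a scratch directory; they default to the real paths. The bridge-nf-call-* keys only exist while br_netfilter is loaded, so a missing key is itself a hint.

```bash
#!/usr/bin/env bash
# Sketch: persist br_netfilter and verify the kernel settings from the playbook.
# MODULES_LOAD_DIR / SYSCTL_ROOT are hypothetical overrides (defaults: real system paths).

# systemd-modules-load reads one module name per line from *.conf files here.
persist_br_netfilter() {
  local dir="${MODULES_LOAD_DIR:-/etc/modules-load.d}"
  mkdir -p "$dir" && printf 'br_netfilter\n' > "$dir/k8s.conf"
}

# Return 0 only if every required key reads "1"; report offenders on stderr.
check_k8s_sysctls() {
  local root="${SYSCTL_ROOT:-/proc/sys}" key path rc=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    path="$root/${key//.//}"            # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [[ ! -r $path ]]; then
      echo "missing: $key (is br_netfilter loaded?)" >&2
      rc=1
    elif [[ $(<"$path") != 1 ]]; then
      echo "not enabled: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage on a node:  check_k8s_sysctls && echo "kernel settings OK"
```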

The CRI-O and Kubernetes packages were spread across several repositories, and I used variables to select the software versions:

- name: Fetch google's key
  get_url:
    url: https://packages.cloud.google.com/apt/doc/apt-key.gpg
    dest: /usr/share/keyrings/kubernetes-archive-keyring.gpg
    checksum: sha256:ff834d1e179c3727d9cd3d0c8dad763b0710241f1b64539a200fbac68aebff3e

- name: Add google's apt repository
  apt_repository:
    repo: "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main"
    filename: "kubernetes"

- name: Fetch Suse's libcontainers key
  apt_key:
    url: https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/xUbuntu_{{ ansible_distribution_version }}/Release.key
    state: present

- name: Fetch Suse's cri-o key
  apt_key:
    url: https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:{{ crio_version }}/xUbuntu_{{ ansible_distribution_version }}/Release.key
    state: present

- name: Add opensuse's apt repository
  apt_repository:
    repo: "deb https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/xUbuntu_{{ ansible_distribution_version }}/ /"
    filename: "kubernetes"
    state: present

- name: Add opensuse's cri-o apt repository
  apt_repository:
    repo: "deb http://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable:/cri-o:/{{ crio_version }}/xUbuntu_{{ ansible_distribution_version }}/ /"
    filename: "kubernetes"
    state: present

Once the repositories were set up, I installed the corresponding packages:

- name: Install kubernetes packages
  apt:
    name:
      - cri-o
      - cri-o-runc
      - kubeadm={{ k8s_version }}-00
      - kubectl={{ k8s_version }}-00
      - kubelet={{ k8s_version }}-00
    state: present
    update_cache: yes

- name: Enable cri-o service
  service:
    name: cri-o
    state: started
    enabled: yes
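CRI-O's minor releases track Kubernetes minors. The node listing further down shows cri-o://1.21.2 next to v1.21.2, so crio_version and k8s_version should share the same major.minor. The -00 suffix is the Debian package revision the apt.kubernetes.io packages used at the time.

Below is a small sanity check one could run before the playbook. The function names are hypothetical; the two arguments mirror the playbook variables.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the crio_version / k8s_version values before running the playbook.

# Print "major.minor" of a version string, e.g. 1.21.2 -> 1.21
minor_of() {
  local IFS=.
  local -a parts=($1)
  echo "${parts[0]}.${parts[1]}"
}

# CRI-O follows Kubernetes minor versions, so both must share major.minor.
versions_compatible() {
  local crio_version=$1 k8s_version=$2
  [[ $(minor_of "$crio_version") == "$(minor_of "$k8s_version")" ]]
}

# The apt "name=version" specs the playbook asks for.
k8s_package_specs() {
  local k8s_version=$1 pkg
  for pkg in kubeadm kubectl kubelet; do
    echo "${pkg}=${k8s_version}-00"
  done
}

# Usage with example values:
#   versions_compatible 1.21 1.21.2 && k8s_package_specs 1.21.2
```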

The Running CRI-O with kubeadm documentation suggests using yq to edit the kubeadm configuration, so I installed it too:

- name: Install yq
  get_url:
    url: https://github.com/mikefarah/yq/releases/download/{{ yq_version }}/yq_linux_amd64
    dest: /usr/local/bin/yq
    mode: 0755
    checksum: sha256:8716766cb49ab9dd7df5622d80bb217b94a21d0f3d3dc3d074c3ec7a0c7f67ea
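The checksum parameter makes get_url refuse a download whose digest doesn't match. Outside Ansible, the same check can be done with GNU coreutils. Below is a minimal sketch with a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: the manual equivalent of get_url's "checksum: sha256:<hex>" (GNU coreutils).
# Returns 0 only when the file's SHA-256 matches the expected hex digest.
verify_sha256() {
  local file=$1 expected=$2
  # sha256sum --check reads "<digest>  <path>" lines; --status prints nothing, sets exit code.
  printf '%s  %s\n' "$expected" "$file" | sha256sum --check --status -
}

# Usage (yq_version is the playbook variable; the digest is the one pinned above):
#   curl -fsSLo /tmp/yq "https://github.com/mikefarah/yq/releases/download/${yq_version}/yq_linux_amd64"
#   verify_sha256 /tmp/yq 8716766cb49ab9dd7df5622d80bb217b94a21d0f3d3dc3d074c3ec7a0c7f67ea \
#     && install -m 0755 /tmp/yq /usr/local/bin/yq
```

Downloading to a temporary path first means a bad file never lands in /usr/local/bin.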

As a NAT interface is mandatory when deploying VMs with Vagrant, I created a drop-in override for the kubelet service so that it uses the correct IP:

- name: Setup correct internalIp in kubelets
  template:
    src: ./templates/09-extra-args.conf.j2
    dest: /etc/systemd/system/kubelet.service.d/09-extra-args.conf
  notify: reload systemd

The contents of the Jinja2 template were:

# {{ ansible_managed }}
[Service]
Environment=KUBELET_EXTRA_ARGS="--address={{ ansible_facts[internalip_iface]['ipv4']['address'] }} --node-ip={{ ansible_facts[internalip_iface]['ipv4']['address'] }}"
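Ansible resolves the address from its gathered facts. To see what the template produces, or to fix a single node by hand, the same drop-in can be built in plain shell from `ip -4 -o addr show` output. The interface name (eth1 below) is a hypothetical example; use whatever NIC carries the private network. The restart step is presumably what the "reload systemd" handler triggers.

```bash
#!/usr/bin/env bash
# Sketch: render the kubelet drop-in from the IPv4 address of a given interface.

# Read `ip -4 -o addr show dev IFACE` output on stdin; print the first IPv4 address.
first_ipv4() {
  awk '$3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}

# Print the systemd drop-in for a given node IP (same content as the Jinja2 template).
kubelet_dropin() {
  local ip=$1
  cat <<EOF
[Service]
Environment=KUBELET_EXTRA_ARGS="--address=${ip} --node-ip=${ip}"
EOF
}

# Usage on a node (eth1 is an assumed interface name):
#   ip=$(ip -4 -o addr show dev eth1 | first_ipv4)
#   kubelet_dropin "$ip" > /etc/systemd/system/kubelet.service.d/09-extra-args.conf
#   systemctl daemon-reload && systemctl restart kubelet
```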

As I had my Nexus repository configured as a Docker Hub (hub.docker.com) registry mirror, I took advantage of it too:

- name: Setup custom registries
  template:
    src: ./templates/registries.conf.j2
    dest: /etc/containers/registries.conf
    backup: yes
  when: 'local_registry is defined'

For the moment I only wanted to use it for Docker Hub images, so the contents of the registries.conf Jinja2 template were:

#{{ ansible_managed }}
unqualified-search-registries = ["{{ local_registry }}"]

[[registry]]
prefix = "k8s.gcr.io"
insecure = false
blocked = false
location = "k8s.gcr.io"
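As written, the template decides where short image names (like nginx) are looked up. It does not redirect fully qualified docker.io/... pulls, and the k8s.gcr.io entry simply points at itself. If the goal is for every docker.io pull to try Nexus first, the v2 containers-registries.conf format has a [[registry.mirror]] table.

Below is a sketch that renders such a file. The mirror address is a hypothetical Nexus host:port. If Nexus serves plain HTTP, the mirror table would also need insecure = true.

```bash
#!/usr/bin/env bash
# Sketch (assumption): registries.conf (v2) sending docker.io pulls to a mirror first,
# falling back to docker.io itself if the mirror can't serve the image.
render_registries_conf() {
  local mirror=$1   # e.g. a hypothetical "nexus.local:8082"
  cat <<EOF
unqualified-search-registries = ["docker.io"]

[[registry]]
prefix = "docker.io"
location = "docker.io"

[[registry.mirror]]
location = "${mirror}"
EOF
}

# Usage: render_registries_conf nexus.local:8082 > /etc/containers/registries.conf
```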

I made a big mistake and didn’t read the docs on creating an HA cluster beforehand, so I kept this task for future use and went ahead without HA:

- name: Fake a cluster load balancer for ha setups
  lineinfile:
    line: "10.255.255.201 apiserver.cluster.local"
    path: /etc/hosts
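For reference, kubeadm only lets additional control plane nodes join when ClusterConfiguration sets controlPlaneEndpoint. That should be a stable, ideally load-balanced, name such as the apiserver.cluster.local entry above.

Below is a sketch of adding it to a kubeadm config file before kubeadm init. It assumes GNU sed and a top-level "kind: ClusterConfiguration" line, and it has not been verified on this cluster. The init and join flags in the comments are real kubeadm options; `<key>` and `...` are placeholders.

```bash
#!/usr/bin/env bash
# Sketch (assumption): give the cluster a stable control plane endpoint before `kubeadm init`.
add_control_plane_endpoint() {
  local conf=$1 endpoint=${2:-apiserver.cluster.local:6443}
  # Append the field right after the ClusterConfiguration kind line (GNU sed one-liner "a").
  sed -i '/^kind: ClusterConfiguration$/a controlPlaneEndpoint: '"$endpoint" "$conf"
}

# Then, roughly:
#   kubeadm init --config /tmp/kubeadm.conf --upload-certs    # prints a certificate key
#   kubeadm join apiserver.cluster.local:6443 --control-plane --certificate-key <key> ...
```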

Did I mention I was doing this on Ubuntu VMs? To prevent mistakes for now, all the tasks above are wrapped in a block that only runs on Ubuntu:

- block:
    # ...
    # all above tasks were here
    # ...
  when: "ansible_distribution == 'Ubuntu'"

Deploying the cluster with kubeadm

I used the script from the Running CRI-O with kubeadm documentation to generate a kubeadm config file at /tmp/kubeadm.conf, but I had to make additional changes to it:

--- /tmp/kubeadm.conf.old 2021-08-23 14:26:55.769902995 +0000
+++ /tmp/kubeadm.conf 2021-08-23 14:27:42.577790034 +0000
@@ -9,7 +9,7 @@
   - authentication
 kind: InitConfiguration
 localAPIEndpoint:
-  advertiseAddress: 1.2.3.4
+  advertiseAddress: 10.255.255.101
   bindPort: 6443
 nodeRegistration:
   criSocket: unix:///var/run/crio/crio.sock
@@ -27,11 +27,12 @@
 etcd:
   local:
     dataDir: /var/lib/etcd
 imageRepository: k8s.gcr.io
 kind: ClusterConfiguration
-kubernetesVersion: 1.21.0
+kubernetesVersion: 1.21.2
 networking:
   dnsDomain: cluster.local
   serviceSubnet: 10.96.0.0/12
+  podSubnet: 10.32.0.0/12
 scheduler: {}
 cgroupDriver: systemd

---

I set advertiseAddress to my VM's address and the Kubernetes version to match my kubeadm version. And as I wanted to use the Weave network plugin, I set podSubnet to Weave’s default range: 10.32.0.0/12.
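The pod subnet, the service subnet and the network the VMs live on must not overlap. Below is a pure-bash sketch that checks this (IPv4 only, inputs assumed to be valid CIDRs). The /24 for the Vagrant private network is an assumption based on the node addresses (10.255.255.101 and so on).

```bash
#!/usr/bin/env bash
# Sketch: verify that pod, service and node networks don't overlap (bash 64-bit integer math).

# Dotted quad -> 32-bit integer, e.g. 10.32.0.0 -> 169869312
ip_to_int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Print "<first> <last>" address of a CIDR block as integers.
cidr_bounds() {
  local ip=${1%/*} len=${1#*/} addr mask start
  addr=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  start=$(( addr & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Exit status 0 if the two CIDR blocks share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<<"$(cidr_bounds "$1")"
  read -r s2 e2 <<<"$(cidr_bounds "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# podSubnet / serviceSubnet from the kubeadm config; node network assumed to be a /24.
pod=10.32.0.0/12 svc=10.96.0.0/12 nodes=10.255.255.0/24
for pair in "$pod $svc" "$pod $nodes" "$svc $nodes"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then echo "overlap: $1 and $2"; else echo "ok: $1 and $2"; fi
done
```

With these values all three pairs print "ok". The /12 pod range spans 10.32.0.0 to 10.47.255.255, and the service range spans 10.96.0.0 to 10.111.255.255.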

Then I ran kubeadm in --dry-run mode to check that everything was in place:

[root@k8snode1]$ kubeadm init --node-name=controlplane --config /tmp/kubeadm.conf --dry-run
W0823 14:28:08.977716 83693 strict.go:54] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeadm.k8s.io", Version:"v1beta2", Kind:"ClusterConfiguration"}: error unmarshaling JSON: while decoding JSON: json: unknown field "cgroupDriver"
[init] Using Kubernetes version: v1.21.2
[preflight] Running pre-flight checks
[preflight] Would pull the required images (like 'kubeadm config images pull')
[certs] Using certificateDir folder "/etc/kubernetes/tmp/
....

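That produced 1124 lines of output, including a complaint about the unrecognized cgroupDriver property. I thought it was OK, as systemd is the default cgroup driver for CRI-O. The warning does mean, though, that kubeadm ignored the field: cgroupDriver is a kubelet setting and has no place in ClusterConfiguration.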
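The kubelet and the container runtime must agree on the cgroup driver. If you want kubeadm to set it explicitly, the kubeadm documentation places it in a separate KubeletConfiguration document (apiVersion kubelet.config.k8s.io/v1beta1) in the same file.

Below is a sketch that moves the misplaced line there. It assumes GNU sed, a top-level cgroupDriver: line as in the diff above, and no existing KubeletConfiguration document in the file.

```bash
#!/usr/bin/env bash
# Sketch: move cgroupDriver from ClusterConfiguration into its own KubeletConfiguration doc.
fix_cgroup_driver() {
  local conf=$1
  sed -i '/^cgroupDriver:/d' "$conf"        # drop the field kubeadm rejected (GNU sed -i)
  # Start a new YAML document unless the file already ends with a separator.
  if [[ $(tail -n1 "$conf") != '---' ]]; then
    printf -- '---\n' >> "$conf"
  fi
  cat >> "$conf" <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}

# Usage: fix_cgroup_driver /tmp/kubeadm.conf && kubeadm init --config /tmp/kubeadm.conf --dry-run
```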

Then I ran the command without the --dry-run option:

[root@k8snode1]$ kubeadm init --node-name=controlplane --config /tmp/kubeadm.conf
W0823 14:35:30.182996 85471 strict.go:54] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeadm.k8s.io", Version:"v1beta2", Kind:"ClusterConfiguration"}: error unmarshaling JSON: while decoding JSON: json: unknown field "cgroupDriver"
[init] Using Kubernetes version: v1.21.2
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [controlplane kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.255.255.101]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [controlplane localhost] and IPs [10.255.255.101 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [controlplane localhost] and IPs [10.255.255.101 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 20.504492 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node controlplane as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node controlplane as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: abcdef.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.255.255.101:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:ebd7518d5eed84695320bda99aa477f8edd1fc68f74bf15819d05a9799abc9cc

That installed the control plane components. Then I used Ansible to run the join command on the remaining control plane nodes:

ansible 'cluster_managers,!k8snode1' -a "kubeadm join 10.255.255.101:6443 --control-plane --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:ebd7518d5eed84695320bda99aa477f8edd1fc68f74bf15819d05a9799abc9cc"

k8snode2 | FAILED | rc=1 >>
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
error execution phase preflight:
One or more conditions for hosting a new control plane instance is not satisfied.

unable to add a new control plane instance a cluster that doesn't have a stable controlPlaneEndpoint address

Please ensure that:
* The cluster has a stable controlPlaneEndpoint address.
* The certificates that must be shared among control plane instances are provided.

To see the stack trace of this error execute with --v=5 or higher
non-zero return code

At this point I realized I had made a mistake planning my cluster: no HA for me! 😭

So, to save resources, I disposed of the additional control plane nodes and joined the worker nodes:

ansible 'cluster_workers' -a "kubeadm join 10.255.255.101:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:ebd7518d5eed84695320bda99aa477f8edd1fc68f74bf15819d05a9799abc9cc"
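These join commands reuse the bootstrap token and CA hash printed by kubeadm init. Bootstrap tokens expire (24 hours by default); after that, `kubeadm token create --print-join-command` prints a fresh join command. The hash doesn't change for a given CA.

The hash can be recomputed with the openssl pipeline from the kubeadm join documentation. Below it is wrapped in a small function with a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: recompute the value for --discovery-token-ca-cert-hash sha256:<hex>.
# It is the SHA-256 of the DER-encoded public key (SubjectPublicKeyInfo) of the cluster CA.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the control plane node:  echo "sha256:$(ca_cert_hash)"
```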

On the first try, the worker nodes got the wrong InternalIP (10.0.2.15, the same on every VM). This happened because the default route goes through the VirtualBox NAT interface, and it was the reason I included the kubelet fix in the playbook.

root@k8snode1:/home/vagrant# kubectl get nodes -o wide

NAME           STATUS   ROLES                  AGE     VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME
controlplane   Ready    control-plane,master   5m19s   v1.21.2   10.255.255.101   <none>        Ubuntu 20.04.3 LTS   5.4.0-77-generic   cri-o://1.21.2
k8snode4       Ready    <none>                 88s     v1.21.2   10.0.2.15        <none>        Ubuntu 20.04.3 LTS   5.4.0-77-generic   cri-o://1.21.2
k8snode5       Ready    <none>                 88s     v1.21.2   10.0.2.15        <none>        Ubuntu 20.04.3 LTS   5.4.0-77-generic   cri-o://1.21.2

I had to delete the nodes and recreate them with the fix applied:

root@k8snode1:/home/vagrant# kubectl get nodes -o wide

NAME           STATUS   ROLES                  AGE     VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME
controlplane   Ready    control-plane,master   7h50m   v1.21.2   10.255.255.101   <none>        Ubuntu 20.04.3 LTS   5.4.0-77-generic   cri-o://1.21.2
k8snode4       Ready    <none>                 6h51m   v1.21.2   10.255.255.104   <none>        Ubuntu 20.04.2 LTS   5.4.0-77-generic   cri-o://1.21.2
k8snode5       Ready    <none>                 7h      v1.21.2   10.255.255.105   <none>        Ubuntu 20.04.2 LTS   5.4.0-77-generic   cri-o://1.21.2

The weave network plugin

You are supposed to install the network plugin before joining other nodes; I did it afterwards, but it seemed to work. I chose the Weave network add-on for two reasons:

- It supported network policies, which flannel didn’t

- It was very easy to install

This is the command I ran to install it:

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

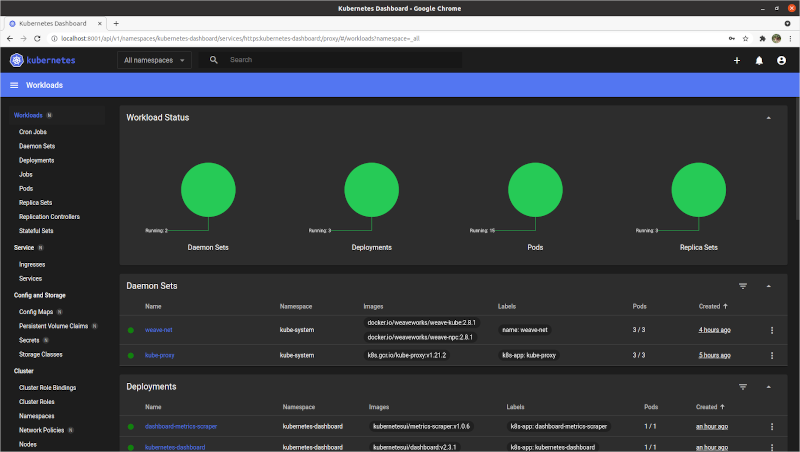

Installing kubernetes dashboard

Once my cluster was running, I installed the Kubernetes dashboard.

It was as simple as applying a manifest from a URL:

[root@k8snode1]$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created

Then I created a service account following the instructions in the Creating sample user guide.

I used this definition file:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Then I got the account’s token with:

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

With that token, I was able to access the dashboard by running kubectl proxy and an SSH tunnel through my control plane node, at the URL http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/
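That URL is the API server's service proxy path, reached through kubectl proxy, which listens on 127.0.0.1:8001 by default. The path has the form /api/v1/namespaces/NAMESPACE/services/[SCHEME:]SERVICE:[PORT]/proxy/.

Below is a sketch of how it is composed, with the two commands behind the tunnel in comments. The vagrant user and the exact ssh invocation are assumptions based on the prompts shown earlier.

```bash
#!/usr/bin/env bash
# Sketch: build the kubectl-proxy URL for a service exposed through the API server proxy.
dashboard_proxy_url() {
  local ns=${1:-kubernetes-dashboard} svc=${2:-kubernetes-dashboard} port=${3:-} scheme=${4:-https}
  printf 'http://localhost:8001/api/v1/namespaces/%s/services/%s:%s:%s/proxy/\n' \
    "$ns" "$scheme" "$svc" "$port"
}

# Usage (two terminals; user and IP are assumed):
#   on the control plane node:  kubectl proxy
#   on the workstation:         ssh -L 8001:127.0.0.1:8001 vagrant@10.255.255.101
#   then open in a browser:     "$(dashboard_proxy_url)"
```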

NOTE: I also created a NodePort service to expose the dashboard, so I could access it directly at https://[any-node-ip]:30443/

The service definition to do that was:

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-fwd
  namespace: kubernetes-dashboard
spec:
  ports:
  - port: 30443
    nodePort: 30443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort
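A nodePort has to fall inside the API server's --service-node-port-range, which defaults to 30000-32767. That is why 30443 works here and 8443 would be rejected. Below is a small sketch of that rule; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: is a port acceptable as a nodePort? Default range 30000-32767 unless overridden.
nodeport_in_range() {
  local port=$1 range=${2:-30000-32767}
  local lo=${range%-*} hi=${range#*-}
  [[ $port =~ ^[0-9]+$ ]] && (( port >= lo && port <= hi ))
}

for p in 30443 8443 32768; do
  if nodeport_in_range "$p"; then echo "$p: valid nodePort"; else echo "$p: rejected"; fi
done
```

A quick check from outside the cluster would then be something like `curl -k https://10.255.255.104:30443/`. The -k flag is needed because the dashboard serves a self-signed certificate by default.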

Conclusions

Even though the course is supposed to be enough to pass the CKA exam if you master the labs, I don’t feel ready yet. For now, I’ve had my first experience deploying a cluster.

My next exercises should be:

- Deploy an ingress controller

- Run some workloads

- Gain understanding of how storage works

- Deploy a high availability enabled cluster

References

- Mumshad Mannambeth’s Certified Kubernetes Administrator (CKA) with Practice Tests course: https://www.udemy.com/course/certified-kubernetes-administrator-with-practice-tests/

- CRI-O web page: https://cri-o.io/

- Running CRI-O with kubeadm: https://github.com/cri-o/cri-o/blob/master/tutorials/kubeadm.md

- Weave network addon: https://www.weave.works/docs/net/latest/kubernetes/kube-addon/

- Kubernetes dashboard: https://github.com/kubernetes/dashboard

- My homelab repo with the files involved: https://github.com/juanjo-vlc/homelab/tree/main/k8s