Deploying Rancher

This weekend I tried Rancher Kubernetes Engine thanks to my colleague Juan, who created a couple of playbooks to deploy a Cluster Manager and a Managed Cluster from scratch. I’ve been keen to try Rancher for a long time, but I was distracted by lots of other things.

First things first: it’s been a long time since my last post. I know, and I’m sorry. I changed jobs earlier this year, and I spent the last quarter of last year learning the technologies I’m expected to know in this job. I’ll talk about them soon, but for the moment, please accept my apologies.

Creating the VMs

As I said before, this weekend I took advantage of my friend’s work: a Rancher cluster manager deployment and a Rancher managed cluster deployment using Ansible. Following his recommendations, I created a set of 8 VMs with 2 CPUs and 4 GiB of RAM each, all of them running Red Hat Enterprise Linux 8.5 thanks to the free developer licenses available at Red Hat’s developer network.

The Vagrantfile and the provisioning scripts are available at my homelab repo.

I tried to keep Vagrant provisioning to a minimum because it didn’t run in parallel. So after Vagrant deployed the VMs with my automation user created, it was time to run the second stage of provisioning: registering the VMs with Red Hat and updating the installed packages. Check the provisioning folder in the repository.

Time to run the Rancher manager playbooks

I was warned that the playbooks were provided “as is” and were intended for personal use by their creator, but I accepted the challenge. He was a fond Rancher user and wanted me to become one, so I promised him I would give them a try this weekend.

I had to run the rancher_manager_playbook playbook several times because some variables were missing their default values or were in the wrong place, but I finally got it working. Once it was working, I created a pull request with the fixes.

Once the playbook executed correctly, I was able to query the cluster using kubectl but unable to access the web interface. After checking that the namespaces were created, the pods were running, and the services were pointing to the correct pods, I remembered there was an ingress. I checked it and saw the URL I should have been using to access the Rancher manager.

# kubectl get ingress --all-namespaces

NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE

cattle-system rancher <none> rnc-lab-mgmt.dev 192.168.123.201,192.168.123.202,192.168.123.203 80, 443 3h48m

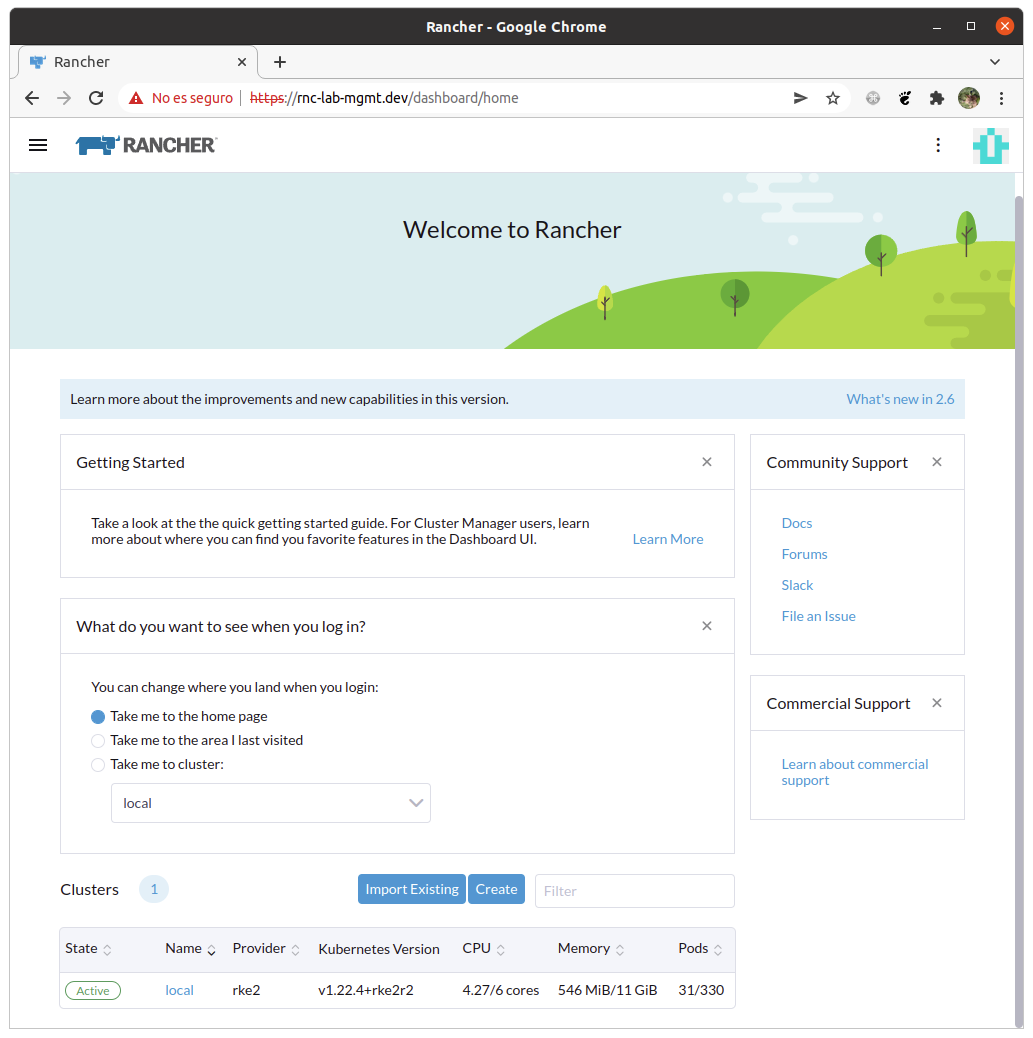

Once I pointed rnc-lab-mgmt.dev on my LAN’s DNS server to my nginx load balancer, I got access to the web interface.
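The ingress lookup can also be scripted, which helps when you want the host names without reading the table by eye. This is a minimal Python sketch, assuming the JSON shape that kubectl returns with -o json for an ingress list. The function names are mine, and the sample data only mirrors the values shown in the kubectl output in this post.

```python
import json
import subprocess


def ingress_hosts(ingress_list):
    """Return (namespace, name, hosts, addresses) for each Ingress in a parsed list."""
    rows = []
    for item in ingress_list.get("items", []):
        meta = item.get("metadata", {})
        # Each rule may carry a host; rules without one match any host.
        rules = item.get("spec", {}).get("rules", [])
        hosts = [rule["host"] for rule in rules if "host" in rule]
        # Load balancer entries expose either an IP or a hostname.
        entries = item.get("status", {}).get("loadBalancer", {}).get("ingress", [])
        addresses = [entry.get("ip") or entry.get("hostname") for entry in entries]
        rows.append((meta.get("namespace"), meta.get("name"), hosts, addresses))
    return rows


def load_from_kubectl():
    """Fetch all ingresses as JSON; needs kubectl and a working kubeconfig."""
    result = subprocess.run(
        ["kubectl", "get", "ingress", "--all-namespaces", "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


# Trimmed sample with the values from my cluster, so the sketch runs offline.
SAMPLE = {
    "items": [
        {
            "metadata": {"namespace": "cattle-system", "name": "rancher"},
            "spec": {"rules": [{"host": "rnc-lab-mgmt.dev"}]},
            "status": {
                "loadBalancer": {
                    "ingress": [
                        {"ip": "192.168.123.201"},
                        {"ip": "192.168.123.202"},
                        {"ip": "192.168.123.203"},
                    ]
                }
            },
        }
    ]
}

if __name__ == "__main__":
    for namespace, name, hosts, addresses in ingress_hosts(SAMPLE):
        print(namespace, name, ",".join(hosts), ",".join(addresses))
```

Swap SAMPLE for load_from_kubectl() to run it against a live cluster.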

I ran the playbook as follows:

ansible-playbook -i inventory rancher_manager_playbook/site.yml -e @values.yml -l manager_cluster

And my values.yml content was:

# Should be in roles/nodes_preq/defaults/main.yml

helm_version: v3.5.3

helm_url: https://get.helm.sh/helm-{{ helm_version }}-linux-amd64.tar.gz

# Should be in roles/rnc-mgmt/defaults/main.yml

cluster_debugging_enabled: false

custom_k8s_cluster_rancher_replicas: 3

rancher_chart_release: "rancher-stable"

rancher_chart_url: "https://releases.rancher.com/server-charts/stable"

rancher_image_tag: "v2.6-head"

http_proxy: ''

no_proxy: ''

# Should have a default in roles/rke2/defaults/main.yml

rke2_type: server

# Customizable values for deployments

custom_k8s_cluster_rancher_host: rnc-lab-mgmt.dev

kube_api_lb_host: "{{ custom_k8s_cluster_rancher_host }}"

lbr_interface: eth1

lbr_ipv4: 192.168.123.229

rke2_cluster_group_name: manager_cluster

rke2_servers_group_name: masters
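Two of these values reference other variables (helm_url uses helm_version, and kube_api_lb_host uses custom_k8s_cluster_rancher_host). Ansible resolves them with Jinja2 at run time. The Python sketch below is only a simplified stand-in for that templating, written with a regular expression so it has no dependencies. It handles plain {{ name }} references and nothing else, and the resolve function is my own illustration, not part of the playbooks.

```python
import re

# Matches simple references such as "{{ helm_version }}".
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def resolve(values, max_passes=10):
    """Expand {{ name }} references between string values until nothing changes."""
    resolved = dict(values)
    for _ in range(max_passes):
        changed = False
        for key, value in resolved.items():
            if not isinstance(value, str):
                continue
            # Unknown names are left untouched instead of raising.
            new_value = PLACEHOLDER.sub(
                lambda match: str(resolved.get(match.group(1), match.group(0))),
                value,
            )
            if new_value != value:
                resolved[key] = new_value
                changed = True
        if not changed:
            break
    return resolved


# A subset of the values.yml shown above.
VALUES = {
    "helm_version": "v3.5.3",
    "helm_url": "https://get.helm.sh/helm-{{ helm_version }}-linux-amd64.tar.gz",
    "custom_k8s_cluster_rancher_host": "rnc-lab-mgmt.dev",
    "kube_api_lb_host": "{{ custom_k8s_cluster_rancher_host }}",
}

if __name__ == "__main__":
    for key, value in resolve(VALUES).items():
        print(f"{key}: {value}")
```

It is a quick way to check what a value will expand to before running the playbook.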

Creating a managed cluster

Once the manager cluster was created, I was ready to deploy a managed cluster. I ran the rancher_managed_playbook playbook and found that some variables didn’t have defaults in the right place. There was also an issue with kubectl being outside of root’s PATH, so Ansible was unable to launch it.
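A PATH problem like this one is easy to confirm before launching a playbook. The following Python sketch looks for a binary on the current PATH and then in a list of extra directories. The directory /var/lib/rancher/rke2/bin is an assumption on my side: it is where RKE2 usually places its binaries, but I have not recorded where kubectl actually was on my nodes. The find_binary helper is hypothetical as well.

```python
import os
import shutil


def find_binary(name, extra_dirs=(), path=None):
    """Locate an executable on PATH, then in extra directories; return its path or None."""
    base = os.environ.get("PATH", "") if path is None else path
    found = shutil.which(name, path=base)
    if found:
        return found
    if extra_dirs:
        # Search the fallback directories in the order given.
        return shutil.which(name, path=os.pathsep.join(extra_dirs))
    return None


if __name__ == "__main__":
    # Assumed fallback location; adjust it to match your nodes.
    location = find_binary("kubectl", extra_dirs=["/var/lib/rancher/rke2/bin"])
    if location is None:
        print("kubectl not found; the playbook would fail to launch it")
    else:
        print(f"kubectl found at {location}")
```

Run it as the same user Ansible becomes on the node, since PATH differs between users.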

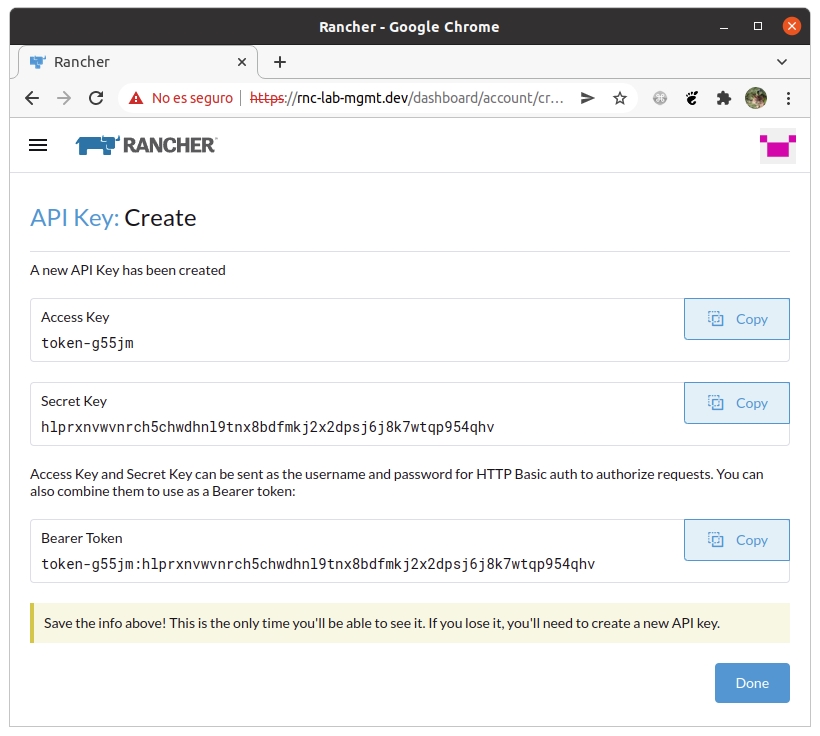

One thing I missed when I visited the repository was that an API token had to be generated before launching the playbook. I generated it and passed it as a variable in my vars file.
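A Rancher API key has two parts joined by a colon: an access key that starts with token- and a secret. The Python sketch below validates that shape and builds a bearer Authorization header from it. How the playbook itself consumes the key is not something I looked into, so treat this as an illustration only. The RANCHER_API_KEY environment variable and the function names are hypothetical, and the key in the example is a placeholder, not a real one.

```python
import os


def split_api_key(api_key):
    """Split 'access_key:secret_key' and reject values that do not have that shape."""
    access_key, sep, secret_key = api_key.partition(":")
    if not sep or not access_key or not secret_key:
        raise ValueError("expected an API key shaped like 'token-xxxxx:secret'")
    return access_key, secret_key


def auth_header(api_key):
    """Build a bearer-token Authorization header from the full API key."""
    split_api_key(api_key)  # validate the shape before using it
    return {"Authorization": f"Bearer {api_key}"}


def api_key_from_env(var="RANCHER_API_KEY"):
    """Read the key from an environment variable (hypothetical name) instead of a file."""
    return os.environ[var]


if __name__ == "__main__":
    # Placeholder key for illustration only.
    example = "token-abcde:examplesecret"
    access_key, _ = split_api_key(example)
    print("access key:", access_key)
    print("header name:", next(iter(auth_header(example))))
```

Reading the key from the environment keeps it out of files that might end up in a repository.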

The playbook invocation was:

ansible-playbook -i inventory-mc rancher_managed_playbook/site.yml -e @values-managed.yml

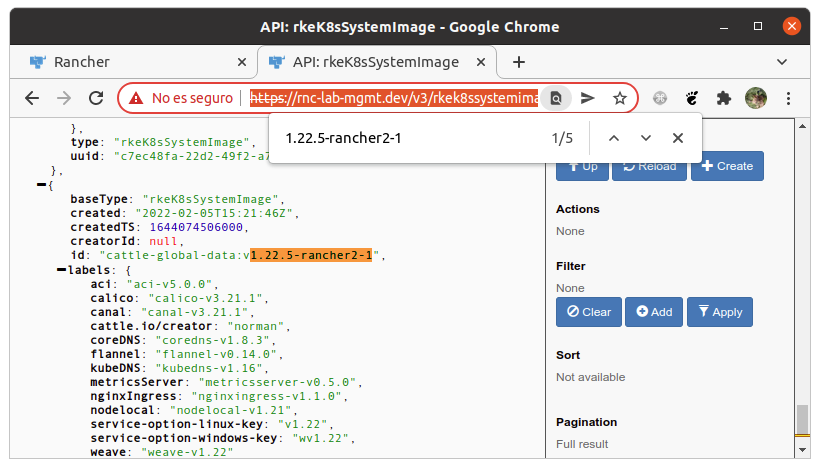

It looked like it was going to succeed, but it hung trying to connect to the first deployed node with an HTTP 500 error. On the manager interface there was an error message complaining about being unable to find a specific Kubernetes image version. I had to go to https://rnc-lab-mgmt.dev/v3/rkek8ssystemimages to find out the available image versions.

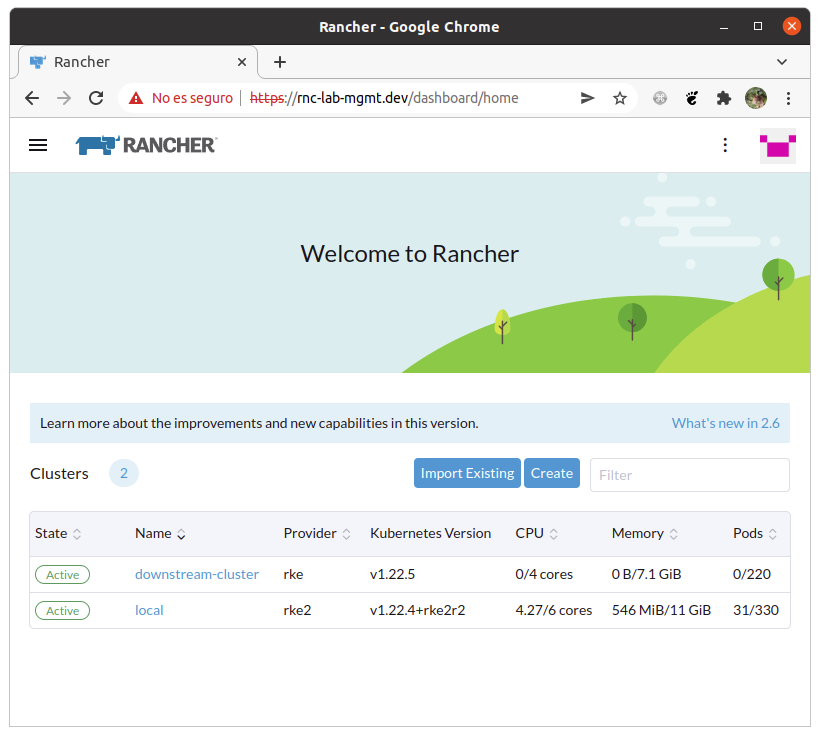

After fixing the version in the cluster_kubernetes_version variable, the next run of the playbook completed successfully, and the new cluster was available on the manager interface.
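The version check I did by hand in the browser could be done before running the playbook. This Python sketch takes the parsed JSON of the rkek8ssystemimages listing and verifies that the wanted version is present. It assumes the payload is a collection with a data list whose entries carry a name field, which is how I remember the response but is not verified here. The sample payload is illustrative: only v1.22.5-rancher2-1 comes from my setup, the other entry is made up.

```python
def available_versions(collection):
    """Return the names of the entries in a Rancher v3 collection payload."""
    return [item["name"] for item in collection.get("data", []) if "name" in item]


def check_version(wanted, collection):
    """Return the wanted version if it is listed, otherwise raise with the alternatives."""
    versions = available_versions(collection)
    if wanted in versions:
        return wanted
    raise ValueError(
        f"{wanted} is not available; choose one of: {', '.join(versions) or 'none'}"
    )


# Illustrative payload; the first entry is invented for the example.
SAMPLE = {
    "type": "collection",
    "data": [
        {"name": "v0.0.0-example1-1"},
        {"name": "v1.22.5-rancher2-1"},
    ],
}

if __name__ == "__main__":
    print(check_version("v1.22.5-rancher2-1", SAMPLE))
```

Feeding it the real response from the manager would have caught my wrong cluster_kubernetes_version before the playbook hung.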

My final values-managed.yml file contained:

# Should be in roles/nodes_preq/defaults/main.yml

# Helm

helm_version: "v3.5.3"

helm_url: https://get.helm.sh/helm-{{ helm_version }}-linux-amd64.tar.gz

http_proxy: ''

https_proxy:

no_proxy:

# Customizable values for deployments

cluster_api_key: 'token-g55jm:hlprxnvwvnrch5chwdhnl9tnx8bdfmkj2x2dpsj6j8k7wtqp954qhv'

cluster_kubernetes_version: "v1.22.5-rancher2-1"

cluster_name: downstream_cluster

cluster_rancher_manager_host: rnc-lab-mgmt.dev

Conclusions

This was my first experience deploying Rancher. Although the playbooks were meant for personal use and I ran into some issues while running them, they allowed me to deploy a working manager cluster and a managed cluster. Now I have a playground where I can test Rancher’s features and deploy some workloads. Thanks, Juan!

References

- Rancher home page: https://rancher.com

- Rancher cluster manager deployment by BoulderES: https://github.com/BoulderES/rancher_manager_playbook

- Rancher managed cluster deployment by BoulderES: https://github.com/BoulderES/rancher_managed_playbook

- My homelab repo: https://github.com/juanjo-vlc/homelab/tree/main/rancher