Connecting cerebro to elasticsearch

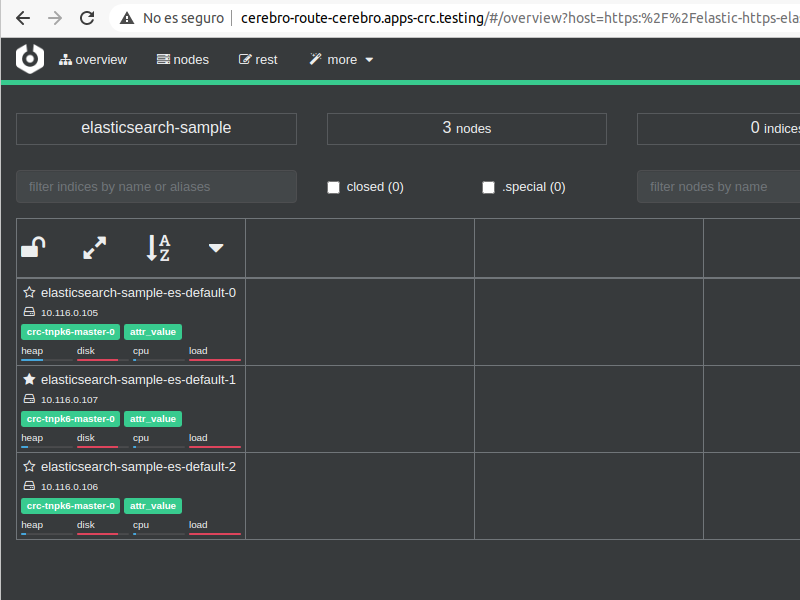

Once cerebro was up and running, I wanted to connect it to an elasticsearch cluster, and it was also a good opportunity to try out the elasticsearch operator for Kubernetes.

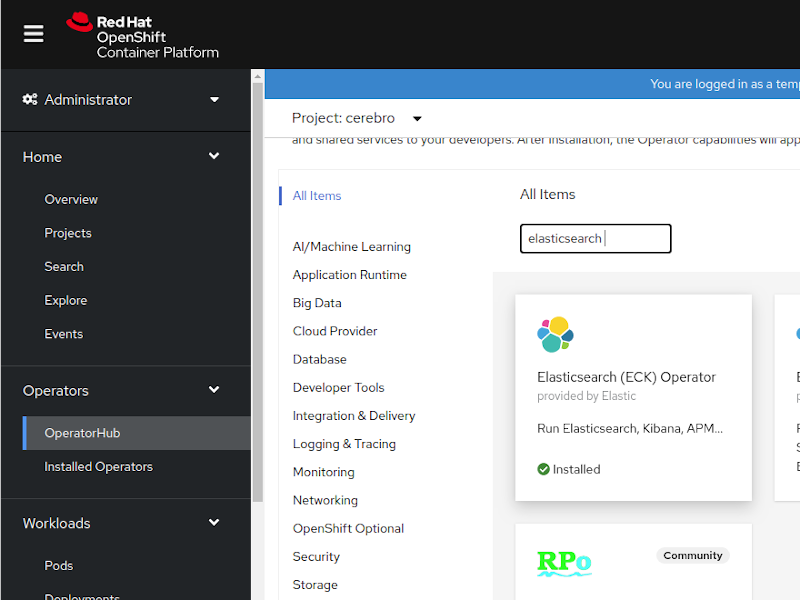

Installing the operator is as easy as navigating to the OperatorHub pane in the Operators menu section, searching for elasticsearch and clicking on the Elasticsearch (ECK) Operator with the “provided by Elastic” note.

You’ll be asked whether to install it for all namespaces or for a specific one. I installed it for all namespaces so I can create elasticsearch clusters in several projects.

Once installed, it shows up in the Installed Operators pane. Clicking on it takes you to the operator details page, where you can choose between several options. I only wanted an Elasticsearch Cluster, so I clicked on “Create instance” and accepted the default values, because exploring the operator wasn’t my objective for today.

Once the cluster was up and running, I noticed there wasn’t any route to port 9200; maybe that was my fault for leaving the HTTP section of the instance creation form blank. So I added a route manually and tested it from my computer’s command line by running curl against the /_cat/nodes API endpoint.
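For reference, that command-line check can be sketched in Python with only the standard library. This is a minimal sketch, not the exact command I ran: it assumes security is enabled (ECK’s default), so the request authenticates as the `elastic` user with HTTP basic auth. The function names and the route hostname in the usage note are hypothetical.

```python
import base64
import ssl
import urllib.request
from typing import Optional


def cat_nodes_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request for the /_cat/nodes endpoint using HTTP basic auth."""
    url = base_url.rstrip("/") + "/_cat/nodes"
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def cat_nodes(base_url: str, user: str, password: str,
              context: Optional[ssl.SSLContext] = None) -> str:
    """Fetch the node list as plain text.

    `context` decides how the server certificate is checked; None means
    Python's default verification against the system CA bundle.
    """
    request = cat_nodes_request(base_url, user, password)
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return response.read().decode("utf-8")
```

Usage would look like `print(cat_nodes("https://elasticsearch.apps-crc.testing", "elastic", password, context=ctx))`, where the host is whatever your route exposes and `ctx` is an `ssl.SSLContext` able to validate the cluster’s certificate. With the default context the call fails for the same reason cerebro did; curl likewise needs `--cacert` or `-k` against a certificate that isn’t from a public CA.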

Then it was time to test my cerebro deployment and, surprisingly, it failed. I messed around with network policies for a while, even though the documentation states that pods are accessible by default, until I said to myself: “why don’t you read the logs?”. There was the key: the certificate used by the elasticsearch cluster wasn’t issued by a public CA, so that was what I needed to fix.
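There are two general ways out of that kind of error, illustrated here with Python’s `ssl` module: trust the cluster’s own CA, or skip certificate verification altogether, which is what a loose “accept any certificate” setting amounts to. This is a sketch under assumptions: the CA certificate is taken to be exported from the cluster to a local PEM file (the file name in the usage note is hypothetical), and `make_ssl_context` is an illustrative helper, not part of cerebro or ECK.

```python
import ssl
from typing import Optional


def make_ssl_context(ca_file: Optional[str] = None,
                     accept_any: bool = False) -> ssl.SSLContext:
    """Return a client-side TLS context for talking to the cluster.

    ca_file:    PEM file with the cluster's own CA. When given, it is used
                instead of the system CA bundle (create_default_context does
                not load the default certs when cafile is passed).
    accept_any: disable all certificate checks; only for local experiments.
    """
    if accept_any:
        ctx = ssl.create_default_context()
        # check_hostname must be switched off before verify_mode can be CERT_NONE.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
        return ctx
    # Default: verify both the certificate chain and the hostname.
    return ssl.create_default_context(cafile=ca_file)
```

`make_ssl_context(ca_file="es-ca.crt")` keeps chain and hostname checks on, so it is the stricter option; `accept_any=True` is convenient in a local crc environment but is best kept away from anything exposed.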

In the lmenezes/cerebro GitHub repository there are several issues about certificate validation, and the fix was adding a directive to the configuration file:

```diff
diff --git a/conf/application.conf b/conf/application.conf
index 89f63a6..506f00b 100644
--- a/conf/application.conf
+++ b/conf/application.conf
@@ -23,6 +23,8 @@ data.path = ${?CEREBRO_DATA_PATH}
 play {
   # Cerebro port, by default it's 9000 (play's default)
   server.http.port = ${?CEREBRO_PORT}
+  ws.ssl.loose.acceptAnyCertificate = false
+  ws.ssl.loose.acceptAnyCertificate = ${?CEREBRO_ACCEPTANYCERT}
 }
 
 es = {
```
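The pair of added lines relies on HOCON’s optional substitution: `${?CEREBRO_ACCEPTANYCERT}` only takes effect when that environment variable is set; otherwise the assignment is dropped and the earlier `false` stays. That resolution rule is emulated below in plain Python as a sketch. It isn’t Typesafe Config itself, and the real library may accept more boolean spellings than the two handled here.

```python
from typing import Mapping, Optional

ENV_VAR = "CEREBRO_ACCEPTANYCERT"


def resolve_accept_any(env: Mapping[str, str], default: bool = False) -> bool:
    """Emulate how these two HOCON lines resolve:

    ws.ssl.loose.acceptAnyCertificate = false
    ws.ssl.loose.acceptAnyCertificate = ${?CEREBRO_ACCEPTANYCERT}
    """
    raw: Optional[str] = env.get(ENV_VAR)
    if raw is None:
        # ${?VAR} with VAR unset: the second assignment disappears,
        # so the earlier default stays in effect.
        return default
    # VAR is set: the later assignment overrides the default.
    # Simplification: only the canonical spellings are recognised.
    if raw == "true":
        return True
    if raw == "false":
        return False
    raise ValueError(f"{ENV_VAR} is not a boolean: {raw!r}")


# Usage against the current process environment:
# import os; print(resolve_accept_any(os.environ))
```

Keeping `false` as the explicit default means the image stays strict unless a deployment opts in.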

I added it and pushed it to my cerebro fork in order to get it merged into the original repo.

After testing the change by running the app locally, I built a new Docker image and pushed it to my Docker Hub repo so I could pull it from my crc environment.

I updated my deployment with the CEREBRO_ACCEPTANYCERT variable set to true and voilà, the connection is now possible.
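In Kubernetes terms, that update adds an entry to the container’s `env` list in the Deployment’s pod template, which triggers a new rollout. The helper below sketches that change on a manifest loaded as a Python dict; the container name `cerebro` and the image in the test data are hypothetical. From the command line, `oc set env deployment/<name> CEREBRO_ACCEPTANYCERT=true` should have the same effect. The value has to be the string `"true"`: Kubernetes env values are strings, so an unquoted YAML boolean is rejected.

```python
import copy
from typing import Any, Dict


def with_env(deployment: Dict[str, Any], container: str,
             name: str, value: str) -> Dict[str, Any]:
    """Return a copy of a Deployment manifest with env var `name` set on `container`."""
    if not isinstance(value, str):
        # EnvVar.value is a string field; booleans must be quoted.
        raise TypeError("env values must be strings")
    patched = copy.deepcopy(deployment)
    for c in patched["spec"]["template"]["spec"]["containers"]:
        if c["name"] != container:
            continue
        env = c.setdefault("env", [])
        for entry in env:
            if entry.get("name") == name:
                # Replace an existing definition, including a valueFrom reference.
                entry["value"] = value
                entry.pop("valueFrom", None)
                break
        else:
            env.append({"name": name, "value": value})
        return patched
    raise KeyError(f"no container named {container!r}")
```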

The Elastic operator has a lot of options and possibilities, so getting to know it a little better is a big task added to my todo list. Let’s see what I’m able to achieve.