My first deployment

Now I have a nice interface for orchestrating Kubernetes, but I know very little about it: I had some theory but had never gotten my hands on it.

I didn’t want to start with a hello world. Since I have some experience with Docker, I wanted to convert my small log management lab from Docker to Kubernetes.

My lab is composed of rsyslog, haproxy, graylog, elasticsearch, cerebro and grafana containers, and I chose cerebro for starters, thinking it was going to be the simplest option.

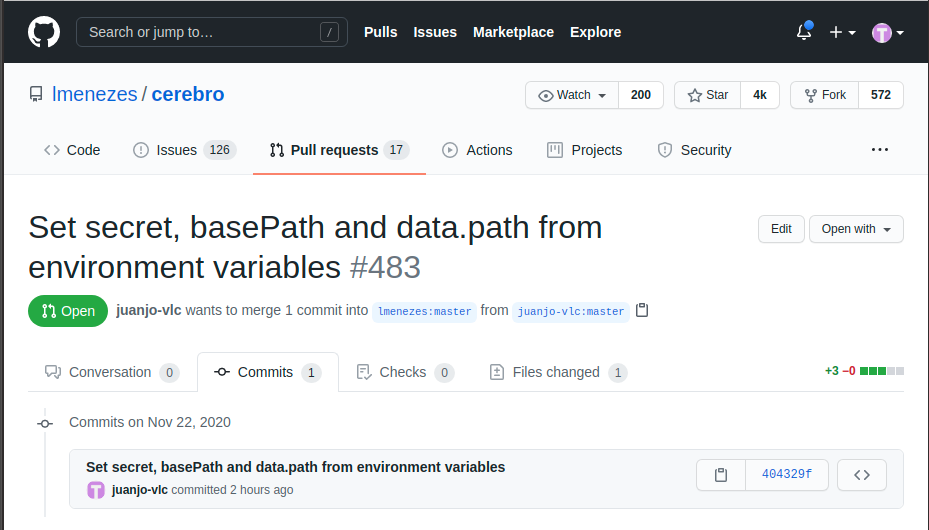

But there was something I wasn’t expecting: cerebro tries to write its config and state to a db file inside its working directory, and that was not allowed. I needed that path to be picked up from an environment variable, but cerebro wasn’t designed that way, so I changed it and opened a pull request.

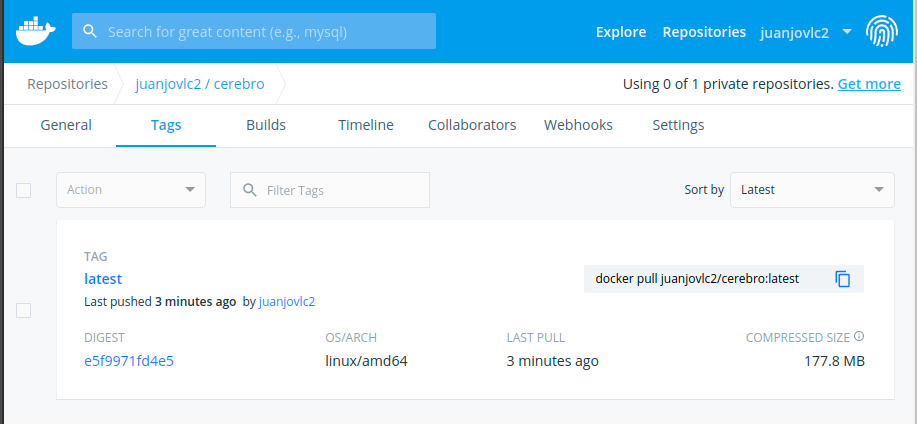

But I didn’t want to wait for the author to accept my changes, so I built my own Docker image so that I could pull it into crc.

Running cerebro from a compose file is very simple, and it wasn’t very difficult to get a k8s deployment YAML from a docker-compose.yml.

docker-compose.yml

```yaml
version: '2'

services:
  cerebro:
    image: lmenezes/cerebro:latest
    ports:
      - "9000:9000"
```

deployment.yml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cerebro-deployment
  labels:
    app: cerebro
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cerebro
  template:
    metadata:
      labels:
        app: cerebro
    spec:
      containers:
      - name: cerebro
        image: juanjovlc2/cerebro:latest
        env:
        - name: CEREBRO_DATA_PATH
          value: /tmp/cerebro.db
        ports:
        - containerPort: 9000
        volumeMounts:
        - name: datadir
          mountPath: /opt/cerebro/logs
      volumes:
      - name: datadir
        emptyDir: {}
```

The tricky part was choosing a volume for the logs. This is a test and I don’t need persistence, so I chose an emptyDir volume for them. I put on my TODO list a modification of the Docker image to send the logs to standard output so they can be gathered by crc.

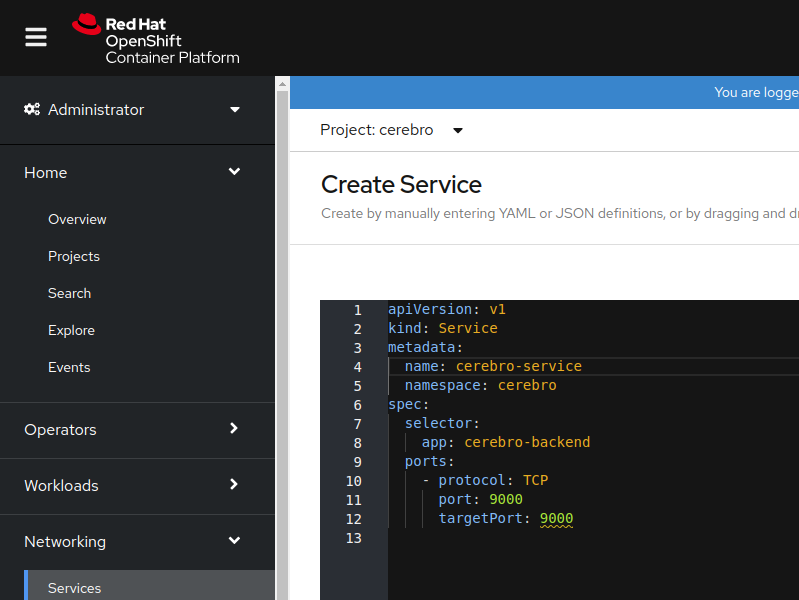

Creating a service

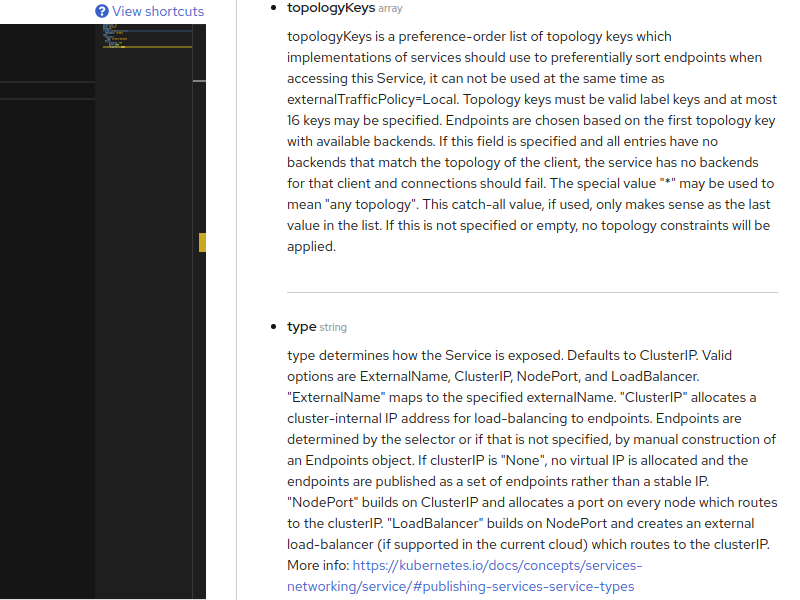

This was new to me. With Docker, you map ports from your host network to the container, but on k8s it works differently: first you create a Service, which makes the pod reachable inside the cluster.

OpenShift’s contextual documentation is handy and saves you from constantly jumping between browser tabs.
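If you prefer a manifest over the web console, a roughly equivalent Service might look like the sketch below. The name `cerebro-service` is a hypothetical choice. The selector reuses the `app: cerebro` label from the Deployment, and port 9000 matches its `containerPort`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: cerebro-service   # hypothetical name, pick your own
spec:
  selector:
    app: cerebro          # matches the pod labels set in the Deployment template
  ports:
  - protocol: TCP
    port: 9000            # port the Service exposes inside the cluster
    targetPort: 9000      # containerPort of the cerebro pod
```

Because the Service selects pods by label rather than by name, it keeps working when the Deployment replaces its pod.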

Creating a route

Now the pod is visible inside the cluster, but we need some way to make it visible to the outside world. Since I’m running CodeReady Containers rather than a fully fledged OpenShift on a cloud provider, I chose to use a route, because cerebro serves HTTP traffic.

Creating a route is very easy from the web interface.
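The same route can also be written as a manifest and applied with `oc apply -f` (for example, with a file named `route.yml`). The following is a minimal sketch that assumes the hypothetical Service name `cerebro-service`. It is not necessarily what the web console generates.

```yaml
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: cerebro-route       # hypothetical name
spec:
  to:
    kind: Service
    name: cerebro-service   # hypothetical; must match the Service's metadata.name
  port:
    targetPort: 9000        # port on the pods behind the Service
```

When `spec.host` is omitted, OpenShift generates a hostname for the route, which is usually the simplest option on a local crc cluster.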

And best of all, it works!

Let’s call it a day!