Bitbucket Pipelines III

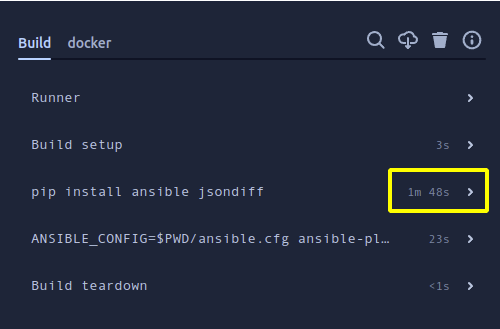

In my previous post I showed how to use bitbucket-pipelines to run Ansible playbooks. For that, I installed Ansible on a standard python-3.9 container image. It worked, but it took about 1 minute and 45 seconds to install the dependencies. That doesn’t look like a big amount of time, but imagine a team that runs hundreds of builds a day, or one that works with more packages than ansible and jsondiff. In this post I build a custom image with the dependencies preinstalled and use it from the pipeline.

Building the image

My testing image was pretty simple: ansible, jsondiff and, as a bonus, the hvac library, as I was planning to use Hashicorp’s Vault lookup plugin at some point.

The final dockerfile was:

    # syntax = docker/dockerfile:1.3

    FROM python:3.9-slim-bullseye

    LABEL org.opencontainers.image.authors="juanjovlc2"

    RUN useradd -M -s /bin/bash -d /workdir -c "Base user" ansible \
        && install -d --owner ansible --group ansible /workdir

    COPY ./requirements.txt /tmp/requirements.txt

    RUN --mount=type=cache,target=/root/.cache \
        pip install -r /tmp/requirements.txt

    RUN apt update \
        && apt install -y openssh-client \
        && rm -rf /var/lib/apt/lists /var/log/*

    USER ansible

    WORKDIR /workdir

This wasn’t the most optimized image possible: I could have used another BuildKit cache for /var/lib/apt and, as I learned later, Bitbucket Pipelines doesn’t use the WORKDIR directory. Still, it was enough for this lab.

First scenario: image hosted publicly on docker hub

The first scenario was to use the publicly available Ansible image on Docker Hub. It was pretty straightforward: I only had to specify the image in the step, and it was downloaded from Docker Hub.

    - step:
        name: Ansible deployment
        image: juanjovlc2/ansible:latest
        deployment: production
        runs-on:
          - self.hosted
          - linux
        script:
          - ANSIBLE_CONFIG=$PWD/ansible.cfg ansible-playbook site.yml $checkMode
        services:
          - docker

The basic requirement here was that the host running the Bitbucket runner needed to be able to reach Docker Hub (registry-1.docker.io). Remember how it works: the runner mounts the Docker daemon’s socket and also the /var/lib/docker directory, so the docker executable in the runner container asks the Docker daemon on the host to download the image.

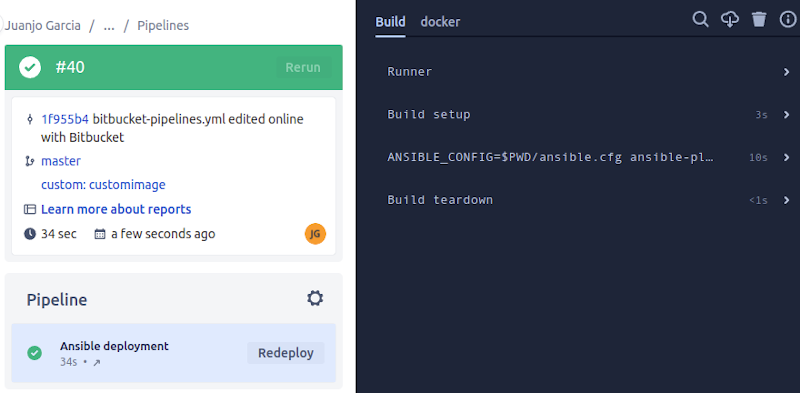

As we can see, the entire pipeline run took only 34 seconds, much less than with the previous setup.
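To put those numbers in perspective, here is a rough back-of-the-envelope calculation. It compares the 1 minute 45 seconds spent only on installing dependencies with the 34 seconds of the whole new run, so the real saving is at least this much. The figure of 200 builds per day is a made-up assumption, not a measurement.

```shell
# Figures from the post: 1 min 45 s installing dependencies before,
# 34 s for the entire pipeline run with the prebuilt image.
old_seconds=105
new_seconds=34
# Hypothetical workload: 200 builds per day (an assumption, not a measurement).
builds_per_day=200

# Seconds saved per day, then converted to hours and minutes.
saved=$(( (old_seconds - new_seconds) * builds_per_day ))
printf 'Saved per day: %dh %dm\n' $(( saved / 3600 )) $(( saved % 3600 / 60 ))
# prints: Saved per day: 3h 56m
```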

Second scenario: image hosted on private repository

In some cases, our code can’t be stored in public repositories because of corporate practices or rules. Sadly, not everybody is creating open source software.

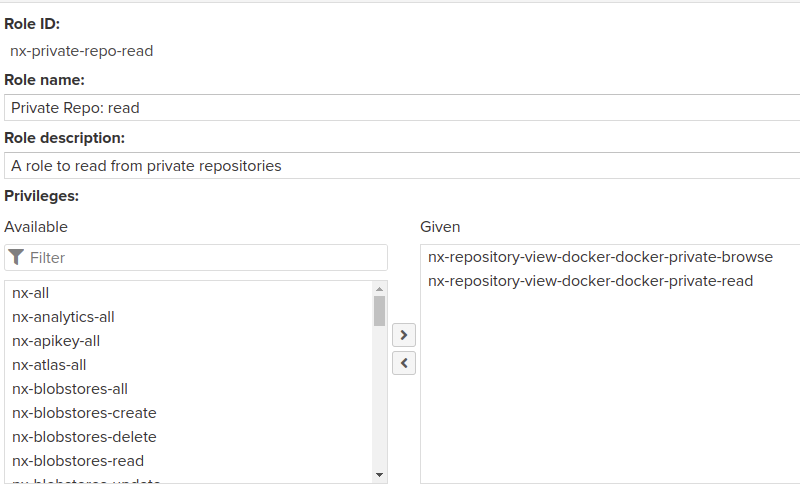

The first thing I did was create a user on my local Sonatype Nexus 3 repository. For that, I first created a couple of roles, a reader and a writer. Only the reader was needed here, but the writer will come in handy if I want to build images from pipelines.

In this case I chose the read and browse permissions for my private repository.

I added a new step to my bitbucket-pipelines.yml to use the image hosted on the private repo.

    - step:
        name: Ansible deployment
        image:
          name: anthrax.garmo.local:8083/juanjovlc2/ansible:latest
          username: $PRIVATE_REPO_USERNAME
          password: $PRIVATE_REPO_PASSWORD
        deployment: production
        runs-on:
          - self.hosted
          - linux
        script:
          - ANSIBLE_CONFIG=$PWD/ansible.cfg ansible-playbook site.yml $checkMode
        services:
          - docker

Note that the image field was no longer a string with the image name, but an object: the name property contains the image’s URL, the username property contains the user for connecting to the private repository, and the password property contains its password.

Bitbucket allows variable substitution in the username and password fields, as in my example, which let me keep them secret using encrypted repository variables. Unfortunately, the name field didn’t allow variable substitution.

Again, the host running the pipeline runners should be able to access the private repository.
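One way to check that access from the runner host before launching a pipeline is to log in and pull the image by hand. This is only a sketch: check_registry_access and the DOCKER override are hypothetical names, the credentials are expected in the same variables the pipeline uses, and it assumes the registry is reachable in a way the host’s Docker daemon accepts (TLS, or an insecure-registry entry).

```shell
# DOCKER can be overridden (for example DOCKER=echo) to preview the commands.
DOCKER="${DOCKER:-docker}"

# Hypothetical helper: log in to a registry and pull one image from it.
# Expects PRIVATE_REPO_USERNAME and PRIVATE_REPO_PASSWORD in the environment.
check_registry_access() {
    registry="$1"   # e.g. anthrax.garmo.local:8083
    image="$2"      # e.g. juanjovlc2/ansible:latest
    # --password-stdin keeps the password off the command line.
    printf '%s' "$PRIVATE_REPO_PASSWORD" |
        "$DOCKER" login --username "$PRIVATE_REPO_USERNAME" --password-stdin "$registry" &&
        "$DOCKER" pull "$registry/$image"
}

# Example call on the runner host:
#   check_registry_access anthrax.garmo.local:8083 juanjovlc2/ansible:latest
```

If the login or the pull fails here, the pipeline step will most likely fail in the same way, so it is a cheap test to run first.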

Extra: learnt by serendipity

While I was working on using private images, I also learned about several things I wasn’t looking for. I knew they were there, but I hadn’t tested them prior to this lab: caches, custom pipelines and pipeline variables.

Regarding the caches, I had seen some colleagues using them, but I didn’t know how they worked. In a few words:

They are WORMs (Write Once Read Many, like an old CD-R): only the first build that uses a cache can write to it. With this in mind, the pipeline should be designed so that the first step warms the cache with every dependency needed. As caches are cleared after a week or by hand, a custom pipeline to warm them proves useful.

    pipelines:
      custom:
        warmcache:
          - step:
              caches:
                - pip
                - wheels
              name: Lint code
              runs-on:
                - self.hosted
                - linux
              script:
                - pip wheel --wheel-dir=/wheels flake8 ansible jsondiff hvac

    definitions:
      caches:
        wheels: /wheels

In this example I’ve used the predefined cache pip and the custom cache wheels to store the Python libraries. To take advantage of them in another step that uses some of the pre-cached libraries, include the caches in the step definition and then run:

    pip install --no-index --find-links /wheels ansible jsondiff hvac
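Because the cache can expire, a step that installs only from /wheels will break when the cache is cold. A possible workaround, sketched below, is a small helper that decides which pip arguments to use. The function name pip_install_args is hypothetical, and the check is deliberately simple: it only looks for at least one .whl file, so a partially warmed cache would still fail with --no-index.

```shell
# Hypothetical helper: print the pip arguments to use for a given wheel directory.
# If the directory holds at least one wheel, install offline from it;
# otherwise fall back to a normal install from the package index.
pip_install_args() {
    wheel_dir="$1"
    shift
    if ls "$wheel_dir"/*.whl >/dev/null 2>&1; then
        printf '%s\n' "--no-index --find-links $wheel_dir $*"
    else
        printf '%s\n' "$*"
    fi
}

# Example use inside a step's script (word splitting of the output is intended):
#   pip install $(pip_install_args /wheels ansible jsondiff hvac)
```

With a warm cache the step installs without touching the network. With a cold one it behaves like a plain pip install.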

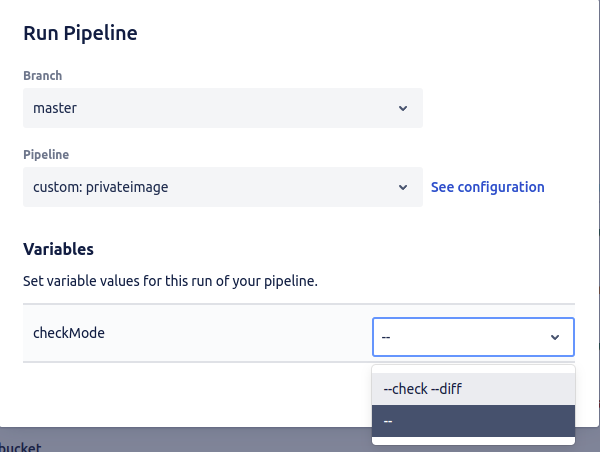

Regarding custom pipelines and variables, they make a great team. When creating Ansible playbooks, it is very common to run them with --check --diff to see what’s going to change, so I used a variable and a custom pipeline to run my playbook either in check mode or for real.

    privateimage:
      - variables:
          - name: checkMode
            default: "--"
            allowed-values:
              - "--check --diff"
              - "--"
      - step:
          name: Ansible deployment
          image:
            name: anthrax.garmo.local:8083/juanjovlc2/ansible:latest
            username: $PRIVATE_REPO_USERNAME
            password: $PRIVATE_REPO_PASSWORD
          deployment: production
          runs-on:
            - self.hosted
            - linux
          script:
            - ANSIBLE_CONFIG=$PWD/ansible.cfg ansible-playbook site.yml $checkMode
          services:
            - docker

In this example, I could use the variable checkMode to include the --check and --diff flags. I tried to use the empty string "" or even a hash # as the “no check” option, but neither of them worked. I’m sure the hash was escaped by the executor, but I don’t know why the empty string failed. Fortunately, the double dash -- worked: the POSIX utility conventions define it as the delimiter that marks the end of the options, so I used it as the “no check flags” option.
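The way the unquoted $checkMode reaches the command can be reproduced in any POSIX shell. The sketch below uses a hypothetical show_args function in place of ansible-playbook and prints each argument it receives. It only illustrates the shell side; it says nothing about how Bitbucket validates the variable before the script runs.

```shell
# Print each argument on its own line, in brackets, to see what a command receives.
show_args() {
    for arg in "$@"; do
        printf '[%s]\n' "$arg"
    done
}

# Unquoted on purpose: the shell splits the value into two separate flags.
checkMode="--check --diff"
show_args site.yml $checkMode
# prints:
# [site.yml]
# [--check]
# [--diff]

# The double dash arrives as a single argument that only marks the end of options.
checkMode="--"
show_args site.yml $checkMode
# prints:
# [site.yml]
# [--]
```

At the shell level an empty value would simply vanish from the command line, so the failure with "" probably happens elsewhere. That is a guess, not something verified in this lab.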

When a variable has a list of allowed values, they are shown as a dropdown.

Conclusions

Atlassian made it pretty easy to work with custom images on Bitbucket Pipelines, and creating the appropriate image for a custom build can save a lot of build time. For instance, if you have all your test dependencies ready in a container image, using that image to run the tests will speed things up a lot. I’m not only talking about Python or npm packages, which can be cached using Bitbucket’s predefined caches; in some cases you need test content that is not well suited to being stored in a VCS. Or, as in my case, the execution framework to run my playbooks.

References

- Speed up pip downloads in Docker with BuildKit’s new caching, by Itamar Turner-Trauring: https://pythonspeed.com/articles/docker-cache-pip-downloads/
- POSIX utility conventions: https://pubs.opengroup.org/onlinepubs/9699919799/basedefs/V1_chap12.html#tag_12_02
- Use Docker images as build environments, Bitbucket docs: https://support.atlassian.com/bitbucket-cloud/docs/use-docker-images-as-build-environments/
- How Caching Can Save Build Minutes in Bitbucket Pipelines, by Stephan Schrijver: https://levelup.gitconnected.com/how-caching-can-save-build-minutes-in-bitbucket-pipelines-219d310ab277
- Caches, Bitbucket docs: https://support.atlassian.com/bitbucket-cloud/docs/cache-dependencies/
- The code for this lab on Bitbucket: https://bitbucket.org/juanjo-vlc/pipelines/src/pipelines-iii/